Volatile Data Acquisition from Live Linux Systems: Part I

by nlqip

The content of this post is solely the responsibility of the author. AT&T does not adopt or endorse any of the views, positions, or information provided by the author in this article.

In digital forensics, volatile data plays a critical role precisely because it is short-lived. On a Linux system it resides in Random Access Memory (RAM) and captures system configuration, active network connections, running processes, and traces of user activity. Once the machine powers down, this information is lost and generally cannot be recovered.

Timely incident response and a detailed timeline of events both depend on capturing this data quickly. This blog lays out a systematic approach, with best practices and tools, for acquiring volatile data on Linux.

Conceptually, volatile data reflects the real-time operational state of a system: its settings, network connectivity, program execution, and user interactions. Because this data is transient, it must be captured and analysed before it disappears.

We walk through each category of volatile data in turn, combining established methods with commonly used tools, so that forensic practitioners have the knowledge needed to acquire volatile data from live Linux environments.

Before proceeding, it’s vital to grasp what volatile data encompasses and why it’s so important in investigations:

System Essentials:

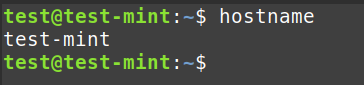

- Hostname: Identifies the system

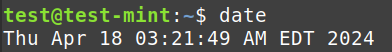

- Date and Time: Contextualizes events

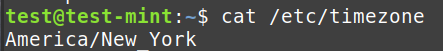

- Timezone: Helps correlate activities across regions

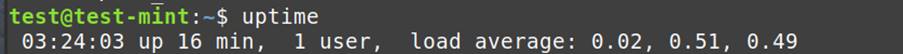

- Uptime: Reveals system state duration

Network Footprint:

- Network Interfaces: Active connections and configurations

- Open Ports: Potential entry points and services exposed

- Active Connections: Shows live communication channels

Process Ecosystem:

- Running Processes: Active programs and their dependencies

- Process Memory: May uncover hidden execution or sensitive data

Open Files:

- Accessed Files: Sheds light on user actions

- Deleted Files: Potential evidence recovery point

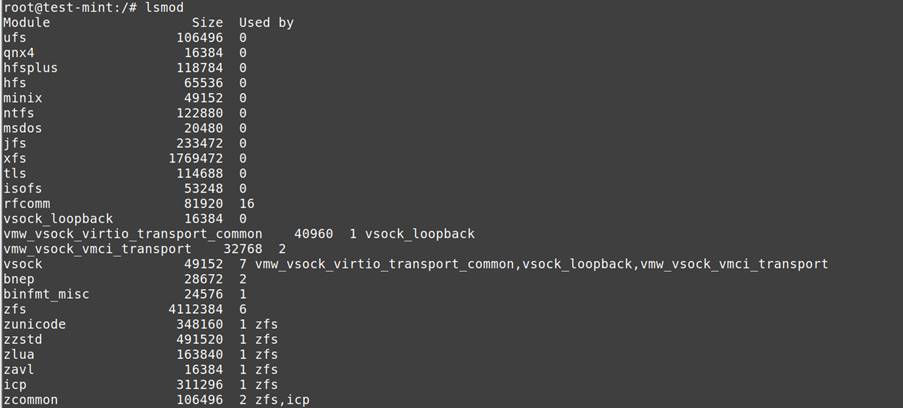

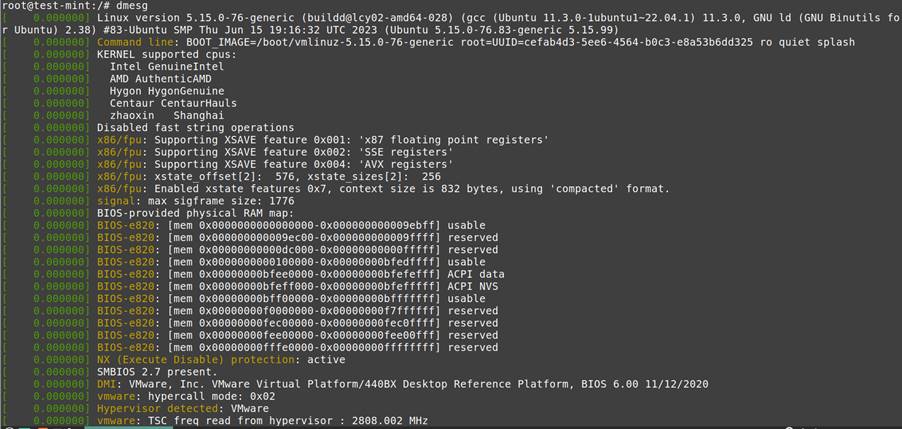

Kernel Insights:

- Loaded Modules: Core extensions and potential rootkits

- Kernel Ring Buffers (dmesg): Reveals driver or hardware events

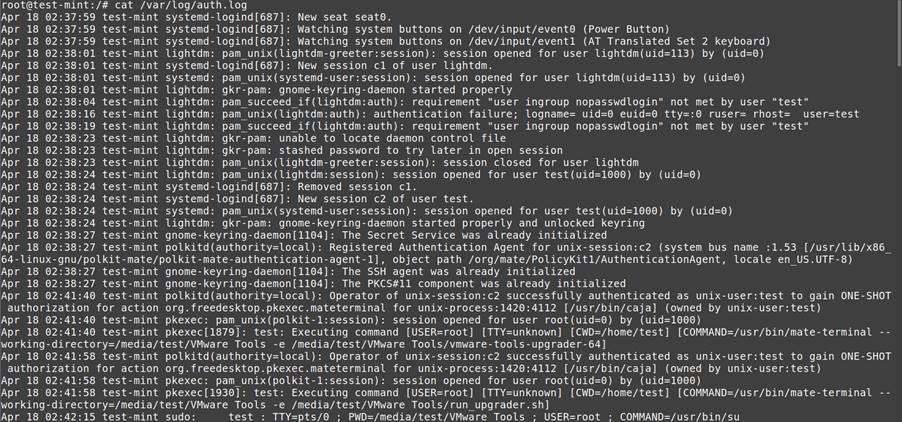

User Traces:

- Login History: User activity tracking

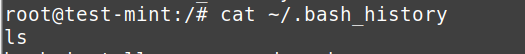

- Command History: Executed commands provide insights

Before diving into the acquisition process, it’s essential to equip yourself with the tools and commands needed to gather volatile data effectively. For the purpose of demonstration, I will be using Linux Mint:

Hostname, Date, and Time:

hostname: Retrieves the system’s hostname.

date: Displays the current date and time.

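cat /etc/timezone: Shows the system’s timezone configuration. This file is present on Debian-based distributions such as Linux Mint; on other systemd-based systems, timedatectl reports the same information.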

System Uptime:

uptime: Shows how long the system has been running since the last boot, along with the number of logged-in users and the load averages.
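The commands above can be gathered into one timestamped evidence file. The following is a minimal sketch rather than a definitive procedure: the `OUT_DIR` location, the file name, and the `collect` helper are hypothetical names chosen for illustration, and in a real investigation the output would normally go to external media rather than the suspect disk.

```shell
#!/bin/sh
# Sketch: record hostname, date, timezone, and uptime with a UTC timestamp.
# OUT_DIR and collect() are illustrative assumptions, not part of any tool.
OUT_DIR="${OUT_DIR:-./volatile_evidence}"
mkdir -p "$OUT_DIR"
OUT_FILE="$OUT_DIR/system_essentials.txt"

# Run one command (if it exists) and append its output under a labelled header.
collect() {
    label="$1"; shift
    {
        printf '=== %s (%s) ===\n' "$label" "$(date -u '+%Y-%m-%dT%H:%M:%SZ')"
        if command -v "$1" >/dev/null 2>&1; then
            "$@" 2>&1
        else
            printf 'command not found: %s\n' "$1"
        fi
        printf '\n'
    } >> "$OUT_FILE"
}

collect "hostname" hostname
collect "date" date
collect "uptime" uptime

# /etc/timezone exists on Debian-based systems; otherwise fall back to date.
if [ -r /etc/timezone ]; then
    collect "timezone" cat /etc/timezone
else
    collect "timezone (fallback)" date '+%Z %z'
fi
```

Stamping each section with the collection time in UTC makes it easier to line the output up with other evidence sources later.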

Network Footprint:

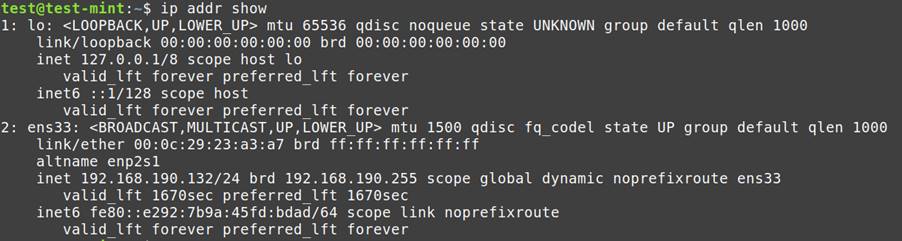

ip addr show: Lists network interfaces along with their addresses, state, and configuration.

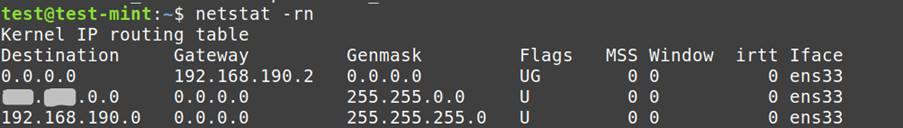

netstat -rn: Displays the kernel routing table with numeric addresses, showing where outbound traffic is sent.
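As a rough sketch, the interface and routing information can be saved together. The `OUT_DIR` path and file name are hypothetical. The fallbacks to `ip route show` and `/proc/net/route` are an assumption for systems where the net-tools package that provides `netstat` is not installed.

```shell
#!/bin/sh
# Sketch: capture interface configuration and the routing table.
# OUT_DIR and NET_FILE are illustrative names only.
OUT_DIR="${OUT_DIR:-./volatile_evidence}"
mkdir -p "$OUT_DIR"
NET_FILE="$OUT_DIR/network_footprint.txt"

{
    echo "=== interfaces (ip addr show) ==="
    if command -v ip >/dev/null 2>&1; then
        ip addr show
    else
        echo "ip not available"
    fi

    echo "=== routing table ==="
    if command -v netstat >/dev/null 2>&1; then
        netstat -rn
    elif command -v ip >/dev/null 2>&1; then
        ip route show
    elif [ -r /proc/net/route ]; then
        # Raw kernel view; addresses are little-endian hexadecimal.
        cat /proc/net/route
    else
        echo "no routing source available"
    fi
} > "$NET_FILE" 2>&1
```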

Open Ports and Active Connections:

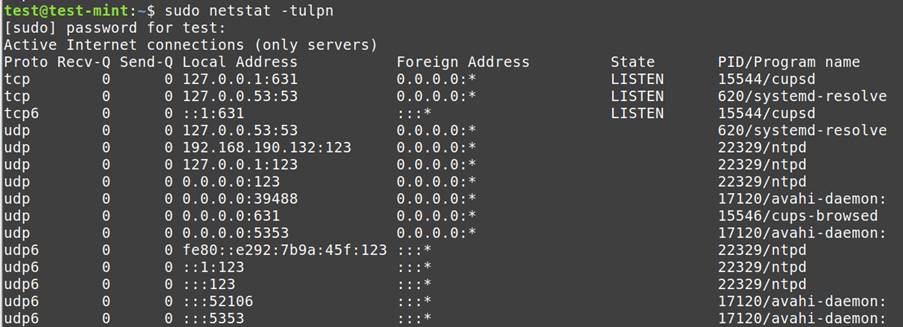

netstat -tulpn: Lists listening TCP and UDP ports along with the owning processes. Run it as root to see process names for sockets owned by other users.

lsof -i -P -n | grep LISTEN: Identifies processes listening on TCP ports. UDP sockets have no LISTEN state, so they do not appear in this filtered output.

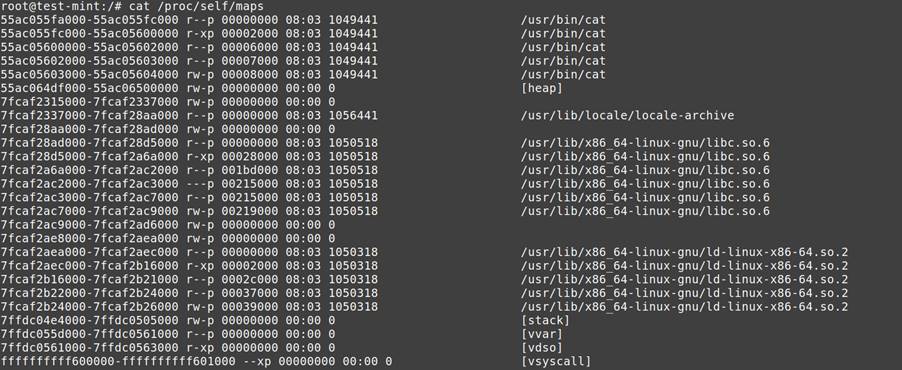
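The port and connection commands can be captured in the same way. This is a sketch under a few assumptions: `OUT_DIR` and the file name are hypothetical. `ss -tulpn` stands in when `netstat` is missing. The `-tanp` listing of all TCP sockets is added here to cover active connections and is not a command the walkthrough itself uses.

```shell
#!/bin/sh
# Sketch: record listening sockets and current TCP connections.
# OUT_DIR and PORTS_FILE are illustrative names only.
OUT_DIR="${OUT_DIR:-./volatile_evidence}"
mkdir -p "$OUT_DIR"
PORTS_FILE="$OUT_DIR/ports_connections.txt"

{
    echo "=== listening sockets ==="
    if command -v netstat >/dev/null 2>&1; then
        netstat -tulpn
    elif command -v ss >/dev/null 2>&1; then
        ss -tulpn
    else
        echo "neither netstat nor ss available"
    fi

    echo "=== all TCP sockets, including established ==="
    if command -v netstat >/dev/null 2>&1; then
        netstat -tanp
    elif command -v ss >/dev/null 2>&1; then
        ss -tanp
    else
        echo "neither netstat nor ss available"
    fi

    echo "=== lsof TCP listeners ==="
    if command -v lsof >/dev/null 2>&1; then
        # grep exits non-zero when nothing is listening; that is not an error.
        lsof -i -P -n | grep LISTEN
    else
        echo "lsof not available"
    fi
} > "$PORTS_FILE" 2>&1
```

Without root privileges the process columns will be incomplete, so it is worth noting in the case record which user ran the collection.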

Running Processes and Memory:

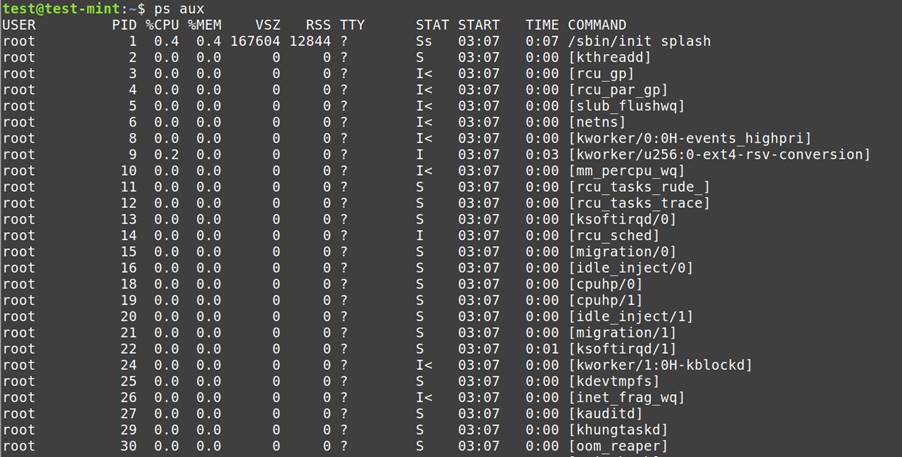

ps aux: Lists all running processes, including their details.

/proc/

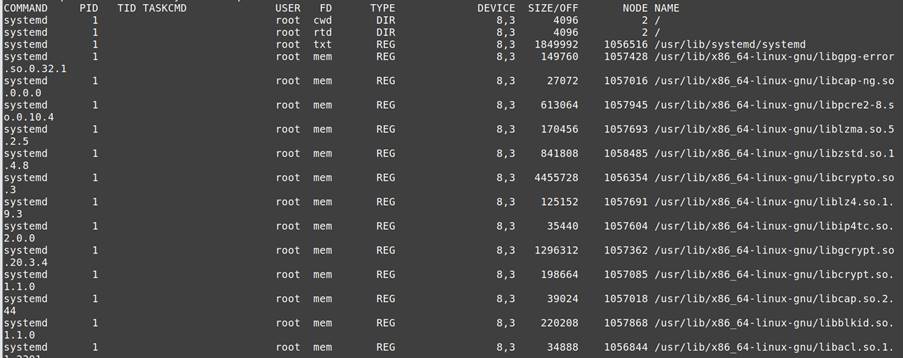

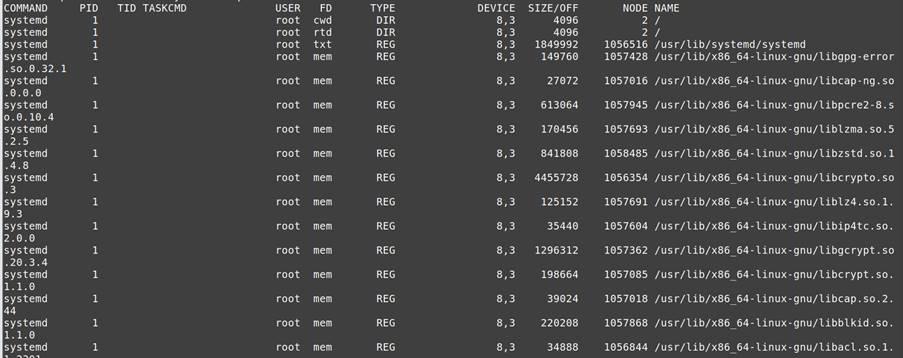
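A hedged sketch of process collection follows. The walkthrough does not say which entries under /proc it inspects, so the choice of `exe` and `cmdline` here is an assumption, as are the `OUT_DIR` path and file names. An `exe` link ending in "(deleted)" means the binary was removed from disk while the process kept running, which is often worth a closer look.

```shell
#!/bin/sh
# Sketch: save the process list, then a per-PID summary read from /proc.
# OUT_DIR, PROC_FILE, and PROC_DETAIL are illustrative names only.
OUT_DIR="${OUT_DIR:-./volatile_evidence}"
mkdir -p "$OUT_DIR"
PROC_FILE="$OUT_DIR/processes.txt"
PROC_DETAIL="$OUT_DIR/proc_details.txt"

ps aux > "$PROC_FILE" 2>&1

: > "$PROC_DETAIL"
for dir in /proc/[0-9]*; do
    # Skip if the glob did not match or the process has already exited.
    [ -d "$dir" ] || continue
    pid="${dir#/proc/}"
    # exe is a symlink to the running binary; reading it may require root.
    exe="$(readlink "$dir/exe" 2>/dev/null)"
    # cmdline is NUL-separated; convert the separators to spaces.
    cmd="$({ tr '\0' ' ' < "$dir/cmdline"; } 2>/dev/null)"
    printf '%s\t%s\t%s\n' "$pid" "${exe:-?}" "${cmd:-?}" >> "$PROC_DETAIL"
done
```

Kernel threads have an empty `cmdline` and no readable `exe`, so they show up with `?` in both columns.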

Open Files:
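No commands are listed for this step, so the following is only a sketch of one common approach, assuming `lsof` is installed. `lsof +L1` selects open files whose link count is below one, meaning files that were deleted while a process still holds them open. The `/proc` fallback, `OUT_DIR`, and the file name are illustrative assumptions.

```shell
#!/bin/sh
# Sketch: list open files and files that are deleted but still held open.
# OUT_DIR and FILES_OUT are illustrative names only.
OUT_DIR="${OUT_DIR:-./volatile_evidence}"
mkdir -p "$OUT_DIR"
FILES_OUT="$OUT_DIR/open_files.txt"

{
    echo "=== open files (lsof -n) ==="
    if command -v lsof >/dev/null 2>&1; then
        lsof -n
    else
        echo "lsof not available"
    fi

    echo "=== deleted but still open ==="
    if command -v lsof >/dev/null 2>&1; then
        lsof -n +L1
    else
        # Fallback: file descriptor links under /proc end in "(deleted)".
        ls -l /proc/[0-9]*/fd 2>/dev/null | grep '(deleted)'
    fi
} > "$FILES_OUT" 2>&1
```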

Kernel Insights:
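No commands are listed for this step either. As a sketch, loaded modules can be listed with `lsmod` (or read from `/proc/modules`) and the kernel ring buffer dumped with `dmesg`. The `OUT_DIR` path and file name are hypothetical. On systems where `kernel.dmesg_restrict` is enabled, `dmesg` needs root.

```shell
#!/bin/sh
# Sketch: record loaded kernel modules and the kernel ring buffer.
# OUT_DIR and KERNEL_FILE are illustrative names only.
OUT_DIR="${OUT_DIR:-./volatile_evidence}"
mkdir -p "$OUT_DIR"
KERNEL_FILE="$OUT_DIR/kernel_insights.txt"

{
    echo "=== loaded modules ==="
    if command -v lsmod >/dev/null 2>&1; then
        lsmod
    elif [ -r /proc/modules ]; then
        cat /proc/modules
    else
        echo "module list not available"
    fi

    echo "=== kernel ring buffer (dmesg) ==="
    if command -v dmesg >/dev/null 2>&1; then
        dmesg || echo "dmesg failed (root may be required)"
    else
        echo "dmesg not available"
    fi
} > "$KERNEL_FILE" 2>&1
```

A rootkit that hides its module from the kernel's module list will not appear in this output, so treat the list as a starting point rather than proof of a clean kernel.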

User Activity:
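This step also has no commands listed. One possible sketch uses `who` for current sessions, `last` for login history, and the on-disk Bash history file. The `OUT_DIR` path and file name are hypothetical. Bash normally writes history to disk when a session exits, so commands typed in a shell that is still open may not appear in the file yet.

```shell
#!/bin/sh
# Sketch: record logged-in users, login history, and saved command history.
# OUT_DIR, USER_FILE, and HIST are illustrative names only.
OUT_DIR="${OUT_DIR:-./volatile_evidence}"
mkdir -p "$OUT_DIR"
USER_FILE="$OUT_DIR/user_activity.txt"
HIST="${HOME:-}/.bash_history"

{
    echo "=== currently logged in (who) ==="
    if command -v who >/dev/null 2>&1; then
        who
    else
        echo "who not available"
    fi

    echo "=== login history (last) ==="
    if command -v last >/dev/null 2>&1; then
        last
    else
        echo "last not available"
    fi

    echo "=== bash history on disk ==="
    if [ -r "$HIST" ]; then
        cat "$HIST"
    else
        echo "no readable history file at $HIST"
    fi
} > "$USER_FILE" 2>&1
```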
