New IBM Chips Aim To Advance AI Adoption

by nlqip

‘Bringing that kind of AI capability to that kind of enterprise environment – it’s really only something IBM can do,’ IBM VP Tina Tarquinio tells CRN.

IBM unveiled its Telum II processor and Spyre accelerator chip during the annual Hot Chips conference, promising partners and solution providers new tools for bringing artificial intelligence use cases to life when the chips become available for Z and LinuxOne in 2025.

The Armonk, N.Y.-based tech giant positions the chips as helping users adopt traditional AI models and emerging large language models (LLMs), and use the ensemble AI method of combining multiple machine learning (ML) and deep learning AI models with encoder LLMs. The conference runs through Tuesday in Stanford, Calif.

“Bringing that kind of AI capability to that kind of enterprise environment – it’s really only something IBM can do,” Tina Tarquinio, IBM’s vice president of product management for Z and LinuxOne, told CRN in an interview.


New IBM Chips

Phil Walker, CEO of Manhattan Beach, Calif.-based solution provider Network Solutions Provider, told CRN in an interview that he is looking to grow his IBM practice and take advantage of the vendor’s AI portfolio.

“WatsonX will be key in business advice and recommendations,” Walker said. He believes IBM will be a “key enabler for midmarket business growth.”

Tarquinio told CRN that IBM continues to see strong mainframe demand for its capabilities in sustainability, cyber resilience and of course AI. She said that mainframes have helped customers meet various data sovereignty regulations such as the European Union’s Digital Operational Resilience Act (DORA) and General Data Protection Regulation (GDPR).

“Our existing clients are leveraging the platform more and getting more capacity, which is great to see,” she said. She added that “the more our partners can understand what we’re bringing and start to talk about use cases with their end clients,” the better.

IBM’s Telum II Processor

The Telum II processor has eight high-performance cores running at 5.5 gigahertz (GHz) with 36 megabytes (MB) of secondary cache per core. On-chip cache capacity totals 360MB, according to IBM.

Samsung Foundry manufactures the processor and the Spyre accelerator, according to IBM. Telum II’s virtual level-four cache of 2.88 gigabytes (GB) per processor drawer promises a 40 percent increase compared to the previous processor generation. The Telum II also promises a fourfold increase in compute capacity per chip compared to the prior generation.
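The cache figures quoted above can be cross-checked with a little arithmetic. The sketch below uses only the numbers in the article plus one labeled assumption: that a processor drawer holds eight Telum II chips, which the article does not state. It also shows that the 360MB total corresponds to ten 36MB caches rather than eight, which suggests that not every cache is tied to one of the eight cores; that reading is an inference from the numbers, not something IBM is quoted as saying here.

```python
# Figures taken from the article.
CORES_PER_CHIP = 8
L2_CACHE_MB = 36
ON_CHIP_TOTAL_MB = 360
DRAWER_VIRTUAL_L4_GB = 2.88

# ASSUMPTION (not in the article): eight processor chips per drawer.
CHIPS_PER_DRAWER = 8

# How many 36MB caches does the 360MB on-chip total imply?
implied_caches = ON_CHIP_TOTAL_MB // L2_CACHE_MB

# Caches beyond one per core, if the inference above holds.
extra_caches = implied_caches - CORES_PER_CHIP

# Pooled on-chip cache across a drawer, in decimal gigabytes.
drawer_total_gb = ON_CHIP_TOTAL_MB * CHIPS_PER_DRAWER / 1000

print(f"Implied 36MB caches per chip: {implied_caches}")
print(f"Caches beyond one per core:   {extra_caches}")
print(f"Pooled cache per drawer:      {drawer_total_gb:.2f} GB")
```

Under the eight-chip assumption, the pooled figure matches the 2.88GB virtual level-four cache that IBM cites per drawer.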

The Telum II chip is integrated with an input/output (I/O) acceleration data processing unit (DPU) meant to improve data handling with 50 percent more I/O density.

The DPU aims to simplify system operations and improve key component performance, according to IBM.

Christian Jacobi, IBM fellow and chief technology officer of systems development, told CRN in an interview that fraud detection, insurance claims processing and code modernization and optimization are some common use cases for the new chip technology and LLMs.

IBM clients can have millions of lines of code to contend with, Jacobi said. And IBM is at work on more specific AI models – along with general-purpose ones – that should appeal to IBM customers that rely on COBOL, for example.

“Everybody sees the impact of AI,” he said. “Everybody knows, ‘AI is disrupting my industry, and if I’m not playing in that space, I’m going to get disrupted.’”

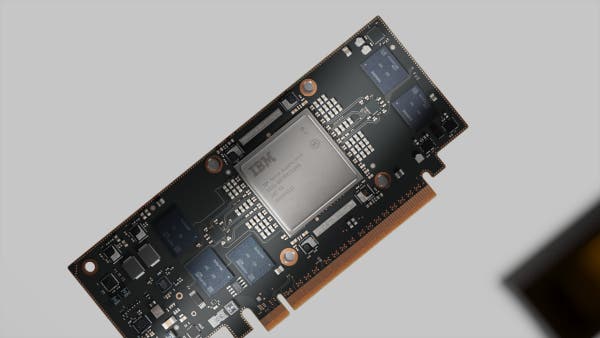

IBM’s Spyre Accelerator

The Spyre accelerator – now in tech preview – has up to a terabyte (TB) of memory and works across the eight cards of a regular I/O drawer.

IBM bills the Spyre as an add-on complement to the Telum II for scaling architecture to support the ensemble AI method.

Jacobi said that the accelerator can help improve accuracy. When a Telum II model “has a certain level of uncertainty – like I’m only 80 percent sure that this is not fraudulent, for example – then you kick the transaction to the Spyre accelerator, where you could have a larger model running that creates additional accuracy.”

“You’re getting the benefits of the speed and energy efficiency of the small model. And then … you use the bigger model to validate that,” he said.
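The pattern Jacobi describes, in which a small model escalates only its uncertain cases to a larger one, can be sketched in a few lines. This is a minimal illustration, not IBM's implementation: the `cascade` function, the 0.9 confidence threshold and both toy models are hypothetical stand-ins chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable

# A scorer maps a transaction to a fraud probability between 0.0 and 1.0.
Scorer = Callable[[dict], float]


@dataclass
class Decision:
    fraud_probability: float
    escalated: bool  # True if the larger model was consulted


def cascade(txn: dict, small: Scorer, large: Scorer,
            min_confidence: float = 0.9) -> Decision:
    """Score with the small model; escalate only when it is unsure."""
    p = small(txn)
    # Confidence is how sure the small model is of its more likely answer.
    confidence = max(p, 1.0 - p)
    if confidence >= min_confidence:
        return Decision(p, escalated=False)
    # Uncertain case: let the larger (hypothetical) model decide.
    return Decision(large(txn), escalated=True)


# Toy stand-ins for the on-chip model and the accelerator-hosted model.
def small_model(txn: dict) -> float:
    return 0.2 if txn["amount"] > 1000 else 0.01


def large_model(txn: dict) -> float:
    return 0.85 if txn.get("new_merchant") else 0.05


routine = cascade({"amount": 10}, small_model, large_model)
unsure = cascade({"amount": 5000, "new_merchant": True},
                 small_model, large_model)
print(routine)
print(unsure)
```

In the second call the small model is only 80 percent sure the transaction is legitimate, mirroring Jacobi's example, so the larger model is consulted. Most traffic never leaves the fast path, which is where the speed and energy savings he mentions would come from.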

Spyre attaches with a 75-watt Peripheral Component Interconnect Express (PCIe) adapter. The accelerator should support AI model workloads across the mainframe while drawing no more than 75 watts of power per card. Each chip has 32 compute cores that support int4, int8, fp8 and fp16 datatypes, according to IBM.
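The datatype list matters because the width of each value determines how much memory a model's weights occupy. The sketch below is back-of-the-envelope arithmetic only: the 7-billion-parameter model is a hypothetical example, and the calculation ignores activations, caches and any per-format overhead.

```python
# Bits used to store one value in each datatype named above.
BITS_PER_VALUE = {"int4": 4, "int8": 8, "fp8": 8, "fp16": 16}


def weight_footprint_gb(num_parameters: int, datatype: str) -> float:
    """Approximate weight storage in decimal gigabytes."""
    bits = num_parameters * BITS_PER_VALUE[datatype]
    return bits / 8 / 1e9


# Hypothetical model size, chosen only for illustration.
PARAMS = 7_000_000_000

for dtype in BITS_PER_VALUE:
    print(f"{dtype:>5}: {weight_footprint_gb(PARAMS, dtype):.1f} GB")
```

Halving the width of each value halves the weight footprint, which is one reason accelerators expose low-precision formats alongside fp16.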
