Audio Deepfake Attacks: Widespread And ‘Only Going To Get Worse’

by nlqip

A cybersecurity researcher tells CRN that his own family was recently targeted with a convincing voice-clone scam.

While audio deepfake attacks against businesses have rapidly become commonplace in recent years, one cybersecurity researcher says it’s increasingly clear that voice-clone scams are also targeting private individuals. He knows this first-hand, in fact.

The researcher, Kyle Wilhoit of Palo Alto Networks’ Unit 42 division, told CRN that his own family was recently targeted with just such a scam.

In late July, Wilhoit said, his wife received a call from a man claiming he’d been in a car accident with their daughter, who is a college student. The caller urgently demanded to be paid for the damages to his vehicle.

Then the caller said he would put their daughter on the phone.

“She was crying, sounded very distraught,” Wilhoit said, recalling what he was told by his wife about the call. “It sounded just like my daughter.”

Except, their daughter had not been in any accident and was still safely at college, he said.

Once this came to light, Wilhoit said his wife hung up on the caller, and they didn’t hear from the man again.

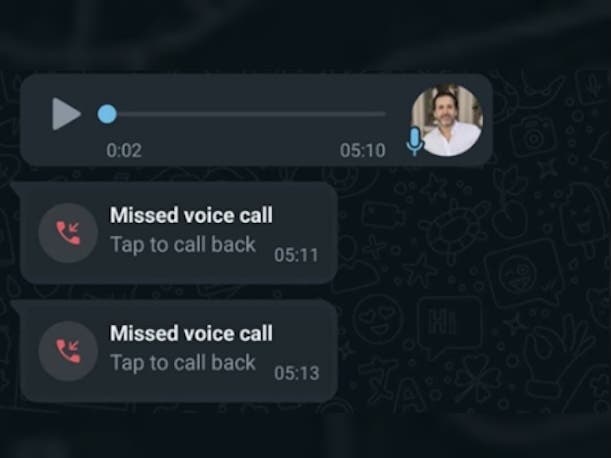

As Wilhoit soon found out, his daughter had actually received strange calls during each of the previous three days, which he now believes were used to record audio samples of her voice.

“It was targeted in nature—they had called her directly at her workplace on three different occasions,” said Wilhoit, technical director for threat research at Palo Alto Networks’ Unit 42.

Ultimately, the incident shows that audio deepfake attacks have become common “to the point where it’s even impacting myself as a cybersecurity researcher,” he said. “The prevalence is massive.”

Experts say that AI-generated impersonation has become a widespread problem since easy-to-use voice cloning tools became available more than a year ago. When targeting individuals, scammers are known to use deepfakes that sound like family members of the intended victims, The Washington Post has reported.

On the business side, according to a report released this week by identity verification vendor Regula, nearly half of surveyed businesses say they’ve been targeted with audio deepfake attacks over the past two years.

In one notable incident, password management vendor LastPass disclosed in April that attackers used a deepfake audio clip of the company’s CEO to target an employee.

‘Much More Accessible’

There’s no doubt that voice-clone technologies have advanced to a point where they are both easy to use and able to produce fake audio that is convincing, according to Sophos Global Field CTO Chester Wisniewski.

By contrast, “a year ago, [the cloning technology] wasn’t good enough without a lot of effort,” Wisniewski said.

Recently, however, Wisniewski said he was able to create a convincing audio deepfake of himself in five minutes.

“Now that that is possible, that makes it much more accessible to the criminals,” he told CRN.

Optiv AI expert Randy Lariar pointed to a case reported earlier this year in which a video deepfake led a Hong Kong company to approve a $25 million funds transfer to a scammer.

Without question, “the ability to inexpensively clone and impersonate someone else for social engineering just gets better and better,” said Lariar, big data and analytics practice director at Denver-based Optiv, No. 25 on CRN’s Solution Provider 500 for 2024.

Ultimately, “I think we are all, myself included, underestimating the capabilities of AI,” he said. “It’s that much of a change in the landscape.”

A ‘Terrifying’ Issue

MacKenzie Brown, vice president of security at managed detection and response provider Blackpoint Cyber, said she expects threat actors to combine voice cloning with other tactics to make these attacks even more successful.

For instance, since voicemails are now often transcribed automatically and sent to the recipient’s email inbox, an attacker who has breached an inbox might read those transcripts to learn how to craft an even more convincing deepfake audio clip targeting that user.

The arrival of these technologies has unquestionably made social engineering and phishing scams into a “more terrifying” issue for organizations and individuals alike, Brown said.

Implement Safe Words

For Wilhoit and his family, being targeted with a convincing voice-clone scam was a major jolt. The experience led them to adopt a safe word that family members can use if something like this happens again.

“That’s one recommendation I would give—ask direct questions and have a safe word,” he said.

Unfortunately, many more people are likely to face a similar scenario in the coming years.

“The barrier to entry, from a technology perspective, is very low. The cost is very low. Putting together an attack is extremely fast and extremely easy,” Wilhoit said. “I think this is only going to get worse.”