OpenAI confirms threat actors use ChatGPT to write malware

by nlqip

OpenAI has disrupted over 20 malicious cyber operations abusing its AI-powered chatbot, ChatGPT, for debugging and developing malware, spreading misinformation, evading detection, and conducting spear-phishing attacks.

The report, which focuses on operations since the beginning of the year, constitutes the first official confirmation that mainstream generative AI tools are being used to enhance offensive cyber operations.

The first signs of such activity were reported in April by Proofpoint, which suspected TA547 (aka “Scully Spider”) of deploying an AI-written PowerShell loader for its final payload, the Rhadamanthys info-stealer.

Last month, HP Wolf researchers reported with high confidence that cybercriminals targeting French users were employing AI tools to write scripts used as part of a multi-step infection chain.

The latest report by OpenAI confirms the abuse of ChatGPT, presenting cases of Chinese and Iranian threat actors leveraging it to enhance the effectiveness of their operations.

Use of ChatGPT in real attacks

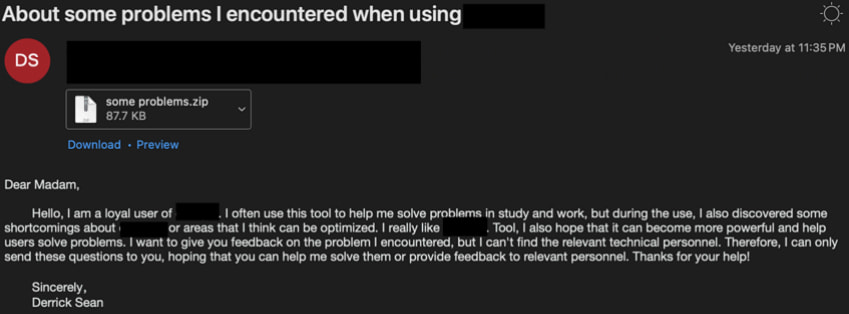

The first threat actor outlined by OpenAI is ‘SweetSpecter,’ a Chinese adversary first documented by Cisco Talos analysts in November 2023 as a cyber-espionage threat group targeting Asian governments.

OpenAI reports that SweetSpecter targeted the company directly, sending spear-phishing emails with malicious ZIP attachments disguised as support requests to the personal email addresses of OpenAI employees.

If opened, the attachments triggered an infection chain, leading to SugarGh0st RAT being dropped on the victim’s system.

Source: Proofpoint

Upon further investigation, OpenAI found that SweetSpecter was using a cluster of ChatGPT accounts that performed scripting and vulnerability analysis research with the help of the LLM tool.

The threat actors utilized ChatGPT for the following requests:

| Activity | LLM ATT&CK Framework Category |
|---|---|
| Asking about vulnerabilities in various applications. | LLM-informed reconnaissance |
| Asking how to search for specific versions of Log4j that are vulnerable to the critical RCE Log4Shell. | LLM-informed reconnaissance |
| Asking about popular content management systems used abroad. | LLM-informed reconnaissance |
| Asking for information on specific CVE numbers. | LLM-informed reconnaissance |
| Asking how internet-wide scanners are made. | LLM-informed reconnaissance |
| Asking how sqlmap would be used to upload a potential web shell to a target server. | LLM-assisted vulnerability research |
| Asking for help finding ways to exploit infrastructure belonging to a prominent car manufacturer. | LLM-assisted vulnerability research |
| Providing code and asking for additional help using communication services to programmatically send text messages. | LLM-enhanced scripting techniques |
| Asking for help debugging the development of an extension for a cybersecurity tool. | LLM-enhanced scripting techniques |
| Asking for help to debug code that’s part of a larger framework for programmatically sending text messages to attacker specified numbers. | LLM-aided development |
| Asking for themes that government department employees would find interesting and what would be good names for attachments to avoid being blocked. | LLM-supported social engineering |
| Asking for variations of an attacker-provided job recruitment message. | LLM-supported social engineering |

The second case concerns ‘CyberAv3ngers,’ a threat group affiliated with the Iranian Government’s Islamic Revolutionary Guard Corps (IRGC) and known for targeting industrial systems at critical infrastructure sites in Western countries.

OpenAI reports that accounts associated with this threat group asked ChatGPT to provide default credentials for widely used Programmable Logic Controllers (PLCs), develop custom bash and Python scripts, and obfuscate code.

The Iranian hackers also used ChatGPT to plan their post-compromise activity, learn how to exploit specific vulnerabilities, and choose methods to steal user passwords on macOS systems, as listed below.

| Activity | LLM ATT&CK Framework Category |
|---|---|
| Asking to list commonly used industrial routers in Jordan. | LLM-informed reconnaissance |
| Asking to list industrial protocols and ports that can connect to the Internet. | LLM-informed reconnaissance |
| Asking for the default password for a Tridium Niagara device. | LLM-informed reconnaissance |
| Asking for the default user and password of a Hirschmann RS Series Industrial Router. | LLM-informed reconnaissance |
| Asking for recently disclosed vulnerabilities in CrushFTP and the Cisco Integrated Management Controller as well as older vulnerabilities in the Asterisk Voice over IP software. | LLM-informed reconnaissance |
| Asking for lists of electricity companies, contractors and common PLCs in Jordan. | LLM-informed reconnaissance |
| Asking why a bash code snippet returns an error. | LLM-enhanced scripting techniques |
| Asking to create a Modbus TCP/IP client. | LLM-enhanced scripting techniques |
| Asking to scan a network for exploitable vulnerabilities. | LLM-assisted vulnerability research |
| Asking to scan zip files for exploitable vulnerabilities. | LLM-assisted vulnerability research |
| Asking for a process hollowing C source code example. | LLM-assisted vulnerability research |
| Asking how to obfuscate VBA script writing in Excel. | LLM-enhanced anomaly detection evasion |
| Asking the model to obfuscate code (and providing the code). | LLM-enhanced anomaly detection evasion |
| Asking how to copy a SAM file. | LLM-assisted post-compromise activity |
| Asking for an alternative application to mimikatz. | LLM-assisted post-compromise activity |
| Asking how to use pwdump to export a password. | LLM-assisted post-compromise activity |
| Asking how to access user passwords in macOS. | LLM-assisted post-compromise activity |

The third case highlighted in OpenAI’s report concerns Storm-0817, another Iranian threat actor.

That group reportedly used ChatGPT to debug malware, create an Instagram scraper, translate LinkedIn profiles into Persian, and develop custom Android malware along with the supporting command and control infrastructure, as listed below.

| Activity | LLM ATT&CK Framework Category |
|---|---|
| Seeking help debugging and implementing an Instagram scraper. | LLM-enhanced scripting techniques |
| Translating LinkedIn profiles of Pakistani cybersecurity professionals into Persian. | LLM-informed reconnaissance |
| Asking for debugging and development support in implementing Android malware and the corresponding command and control infrastructure. | LLM-aided development |

The malware created with the help of OpenAI’s chatbot can steal contact lists, call logs, and files stored on the device, take screenshots, read the user’s browsing history, and obtain the device’s precise location.

“In parallel, STORM-0817 used ChatGPT to support the development of server side code necessary to handle connections from compromised devices,” reads the OpenAI report.

“This allowed us to see that the command and control server for this malware is a WAMP (Windows, Apache, MySQL & PHP/Perl/Python) setup and during testing was using the domain stickhero[.]pro.”

All OpenAI accounts used by the above threat actors were banned, and the associated indicators of compromise, including IP addresses, have been shared with cybersecurity partners.
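Indicators such as the domain quoted above are usually written in "defanged" form (`stickhero[.]pro`) so they cannot be clicked or resolved by accident. The following is a minimal Python sketch of that convention, not anything taken from OpenAI's report; the function names `defang` and `refang` are hypothetical, and it assumes only the two most common substitutions (`.` to `[.]` and `http` to `hxxp`).

```python
import re


def refang(indicator: str) -> str:
    """Restore a defanged indicator so it can be loaded into a blocklist."""
    text = indicator.replace("[.]", ".")
    # Only a leading scheme is rewritten, so "hxxp" inside a path is untouched.
    return re.sub(r"^hxxp", "http", text, flags=re.IGNORECASE)


def defang(indicator: str) -> str:
    """Make a domain, IP address, or URL non-clickable for safe sharing."""
    # Normalize first so an already defanged value is not bracketed twice.
    text = refang(indicator)
    text = re.sub(r"^http", "hxxp", text, flags=re.IGNORECASE)
    # Bracket every dot so the value no longer resolves or auto-links.
    return text.replace(".", "[.]")


if __name__ == "__main__":
    print(defang("stickhero.pro"))
    print(refang("stickhero[.]pro"))
```

Defenders who receive shared indicators would typically refang them only inside tooling such as a blocklist importer, and keep the defanged form in reports and tickets.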

Although none of the cases described above gives threat actors new capabilities in developing malware, they constitute proof that generative AI tools can make offensive operations more efficient for low-skilled actors, assisting them in all stages, from planning to execution.
