Outrun By Nvidia, Intel Pitches Gaudi 3 Chips For Cost-Effective AI Systems

by nlqip

In presentations and in interviews with CRN, Intel executives elaborate on the chipmaker’s strategy to market its Gaudi 3 accelerator chips to businesses who need cost-effective AI systems backed by open ecosystems after CEO Pat Gelsinger admitted that Intel won’t be ‘competing anytime soon for high-end training’ against rivals like Nvidia.

Instead, the semiconductor giant believes its Gaudi 3 chips will find traction with businesses who need cost-effective AI systems for training and, to a much greater extent, inferencing smaller, task-based models and open-source models.

Intel outlined its strategy for Gaudi 3 when it announced last month that the accelerator chip, a key product in CEO Pat Gelsinger’s turnaround plan, will debut in servers from Dell Technologies and Supermicro in October. General availability is expected later in the fourth quarter, a delay from the third-quarter release window Intel gave in April.

Hewlett Packard Enterprise is expected to follow with its own Gaudi 3 system in December. System availability from other OEMs, including Lenovo, was not disclosed.

On the cloud front, Gaudi 3 will be available through services hosted on IBM Cloud early next year and sooner on Intel Tiber AI Cloud, the chipmaker’s recently rebranded cloud service that is meant to support commercial applications.

At a recent press event, Intel homed in on its competitive messaging around Gaudi 3, saying that it delivers a “price performance advantage,” particularly around inference, against Nvidia’s H100 GPU, which debuted in 2022, played a major role in Nvidia’s ascent as a data center vendor and was succeeded earlier this year by the memory-rich H200.

When it comes to an 8-billion-parameter Llama 3 model, Gaudi 3 is roughly 9 percent faster than the H100 but provides 80 percent better performance-per-dollar, according to calculations by Intel. For a 70-billion-parameter Llama 2 model, the chip is 19 percent faster but improves performance-per-dollar by roughly two times, the company said.
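Intel's two figures for each model also imply a relative system cost. This depends on one assumption: that performance-per-dollar means throughput divided by price. Intel's exact pricing basis is not given here. The sketch below backs out that implied price ratio from the percentages quoted above. It treats "roughly two times" as exactly 2.0.

```python
def implied_price_ratio(speedup: float, perf_per_dollar_gain: float) -> float:
    """Return Gaudi 3 system price as a fraction of the H100 system price.

    Assumption: perf-per-dollar = throughput / price, so
    (perf_g / price_g) / (perf_h / price_h) = perf_per_dollar_gain,
    which rearranges to price_g / price_h = speedup / perf_per_dollar_gain.
    """
    return speedup / perf_per_dollar_gain

# Intel's quoted claims: (relative speed, relative performance-per-dollar)
claims = {
    "Llama 3 8B": (1.09, 1.80),   # 9% faster, 80% better perf/$
    "Llama 2 70B": (1.19, 2.00),  # 19% faster, ~2x perf/$ (assumed exactly 2)
}

for model, (speedup, gain) in claims.items():
    ratio = implied_price_ratio(speedup, gain)
    print(f"{model}: implied Gaudi 3 cost is about {ratio:.0%} of the H100 system cost")
```

Under that assumption, both claims point to a Gaudi 3 system priced at roughly 60 percent of a comparable H100 system.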

Intel has previously said that Gaudi 3’s power efficiency is on par with the H100 when it comes to inferencing large language models (LLMs) with an output of 128 tokens, but it has a performance-per-watt advantage when that output grows to 1,024 tokens. It has also said that Gaudi 3 has faster LLM inference throughput than the H200 with the same large token output. Tokens typically represent words or pieces of words.

While Gaudi 3 was able to outperform the H100 and H200 on these two LLM inference throughput tests, the chip’s peak floating-point throughput in 16-bit and 8-bit formats falls short of the H100’s.

For bfloat16 (BF16) and 8-bit floating-point (FP8) matrix math, Gaudi 3 can perform 1,835 trillion floating-point operations per second (TFLOPS) in each format, while the H100 can reach 1,979 TFLOPS for BF16 and 3,958 TFLOPS for FP8.
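Dividing the peak figures above shows how large the gap is on paper. This is simple arithmetic on spec-sheet maxima. It says nothing about delivered throughput on real models. One caveat: Nvidia's H100 data sheet lists these figures with structured sparsity enabled, so they are not strictly like-for-like with dense numbers.

```python
# Peak throughput in TFLOPS, as quoted in the article. Nvidia's data sheet
# lists the H100 values with structured sparsity enabled.
PEAK_TFLOPS = {
    "Gaudi 3": {"BF16": 1835, "FP8": 1835},
    "H100": {"BF16": 1979, "FP8": 3958},
}

def peak_ratio(fmt: str) -> float:
    """Gaudi 3 peak as a fraction of the quoted H100 peak for one number format."""
    return PEAK_TFLOPS["Gaudi 3"][fmt] / PEAK_TFLOPS["H100"][fmt]

for fmt in ("BF16", "FP8"):
    print(f"{fmt}: Gaudi 3 reaches {peak_ratio(fmt):.1%} of the quoted H100 peak")
```

On these numbers, Gaudi 3 sits at roughly 93 percent of the H100 in BF16 and about 46 percent in FP8.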

But even if the chipmaker can claim advantages over the H100 or H200, Intel has to contend with the fact that Nvidia has moved to an annual chip release cadence. The rival plans to debut its next-generation Blackwell GPUs by the end of the year, and Nvidia has promised they will be more powerful and efficient.

Intel is also facing another rival that has become increasingly competitive in the AI computing space: AMD. The rival chip designer said last week that its forthcoming Instinct MI325X GPU can outperform Nvidia’s H200 on inference workloads. It also vowed that its next-generation MI350 chips will improve performance by a wide margin.

Why Intel Thinks It Can Find A Way Into The AI Chip Market

Knowing the battle ahead, Intel does not intend to go head-to-head with Nvidia’s GPUs in the race to build the fastest AI systems for training massive AI models, such as OpenAI’s GPT-4, a Mixture-of-Experts model reported to have 1.8 trillion parameters.

In an interview with CRN, Anil Nanduri, the head of Intel’s AI acceleration office, said purchasing decisions around infrastructure for training AI models so far have been mainly made based on performance and not cost.

That trend has largely benefited Nvidia and has allowed the company to build a groundswell of support among AI developers. In turn, developers have made significant investments in Nvidia’s full stack of technologies to build out their applications, raising the bar for moving development to another platform.

“And if you think in that context, there is an incumbent benefit, where all the frontier model research, all the capabilities are developed on the de facto platform where you’re building it, you’re researching it, and you’re, in essence, subconsciously optimizing it as well. And then to make that port over [to a different platform] is work,” Nanduri said.

It may make sense, at least for now, for hyperscalers like Meta and Microsoft to invest significant sums of money in ultrapowerful AI data center infrastructure to push cutting-edge capabilities without an immediate need to generate profits. OpenAI, for instance, is expected to generate $5 billion in losses—some of which is tied to services—this year on $3.6 billion in revenue, CNBC and other publications reported last month.

But many businesses cannot afford to make such investments and accept such losses. They also likely don’t need massive AI models that can answer questions on topics that go far beyond their focus areas, according to Nanduri.

“The world we are starting to see is people are questioning the [return on investment], the cost, the power and everything else. This is where—I don’t have a crystal ball—but the way we think about it is, do you want one giant model that knows it all?” Nanduri said.

Intel believes the answer is “no” for many businesses and that they will instead opt for smaller, task-based models that have lighter performance needs.

Nanduri said while Gaudi 3 is “not catching up” to Nvidia’s latest GPU from a head-to-head performance perspective, the accelerator chip is well-suited to enable economical systems for running task-based models and open-source models on behalf of enterprises, which is where the company has “traditional strengths.”

“For the enterprises where we have a lot of strong relationships, they’re not the first rapid adopters of AI. They’re actually very thoughtful about how they’re going to deploy it. So I think that’s what’s driving us to this assessment of what is the product market fit and to our customer base, where we traditionally have strong relationships,” he said.

Justin Hotard, an HPE veteran who became leader of Intel’s Data Center and AI Group at the beginning of the year, said he and other leaders settled on this strategy after hearing from enterprise customers who want more economical AI systems, which has helped inform Intel’s belief that there could be a big market for such products.

“We feel like where we are with the product, the customers that are engaged, the problems we’re solving, that’s our swim lane. The bet is that the market will open up in that space, and there’ll be a bunch of people building their own inferencing solutions,” he said in response to a CRN question at the press event.

At a financial conference in August, Gelsinger admitted that the company isn’t going to be “competing anytime soon for high-end training” because its competitors are “so far ahead,” so it’s betting on AI deployments with enterprises and at the edge.

“Today, 70 percent of computing is done in the cloud. 80-plus percent of data remains on-prem or in control of the enterprise. That’s a pretty stark contrast when you think about it. So the mission-critical business data is over here, and all of the enthusiasm on AI is over here. And I will argue that that data in the last 25 years of cloud hasn’t moved to the cloud, and I don’t think it’s going to move to the cloud,” he said at the Deutsche Bank analyst conference.

Intel Bets On Open Ecosystem Approach

Intel also hopes to win over customers with Gaudi 3 by embracing an open ecosystem approach across hardware infrastructure, software platforms and applications, which executives said contrasts with Nvidia’s “walled garden” strategy.

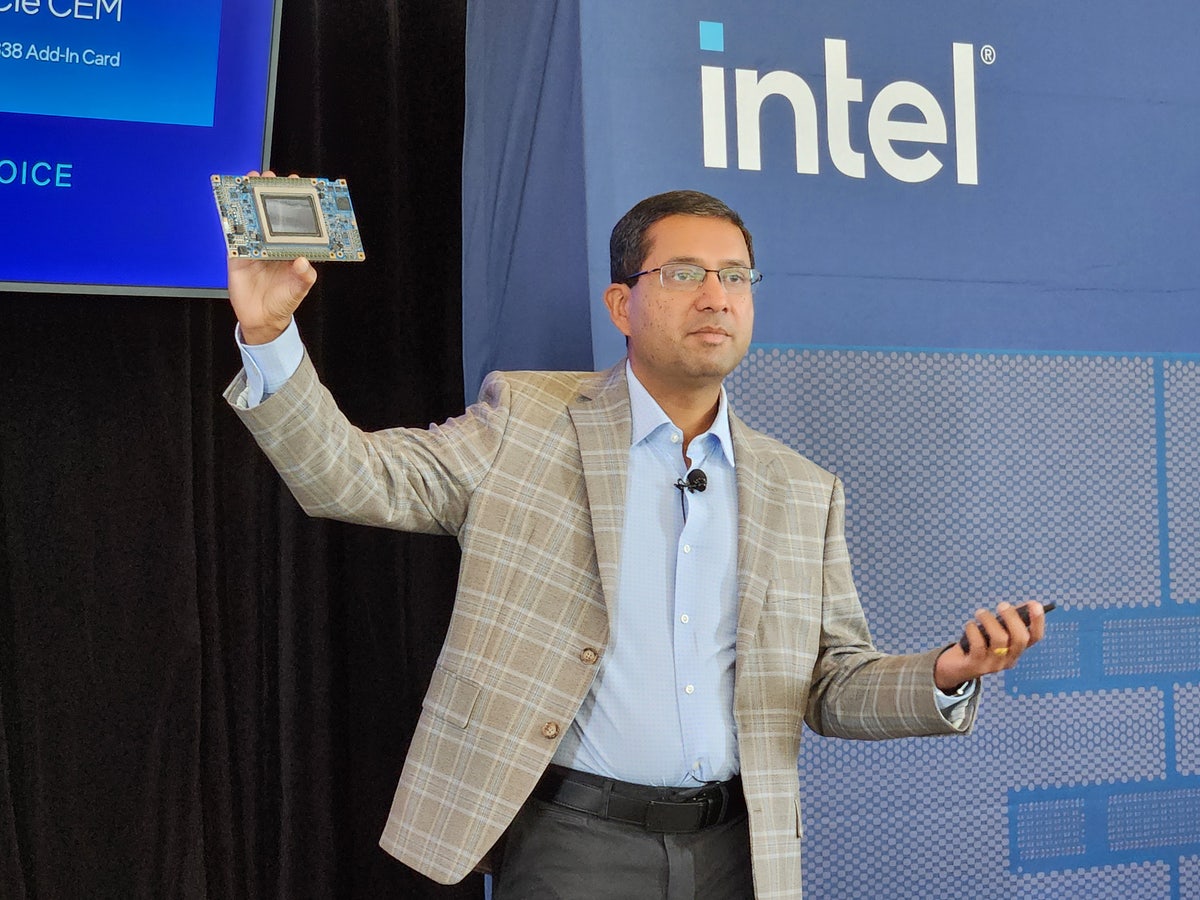

Saurabh Kulkarni (pictured), vice president of product management in Intel’s Data Center and AI Group, said customers and partners will have the choice to scale Gaudi 3 from one system with eight accelerator chips, all the way to a 1,024-node cluster with over 8,000 chips, with several configuration options in between, all meant for different levels of performance.
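The scaling range comes down to simple multiplication. Each Gaudi 3 system holds eight accelerators, so the top configuration works out to 1,024 × 8 = 8,192 chips, the "over 8,000" Kulkarni cites. In the sketch below, the 1-node and 1,024-node counts come from the article. The 16-node and 128-node sizes are hypothetical midpoints, not Intel's published configuration tiers.

```python
ACCELERATORS_PER_NODE = 8  # one Gaudi 3 system, per the article

def total_accelerators(nodes: int) -> int:
    """Accelerator count for a cluster built from identical 8-chip systems."""
    if nodes < 1:
        raise ValueError("a cluster needs at least one node")
    return nodes * ACCELERATORS_PER_NODE

# 1 and 1,024 nodes come from the article; 16 and 128 are hypothetical midpoints.
for nodes in (1, 16, 128, 1024):
    print(f"{nodes:>5} nodes -> {total_accelerators(nodes):>5} accelerators")
```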

To enable the hardware ecosystem, Intel is replicating its Xeon playbook by providing OEMs with reference architectures and designs, which “can then be used as blueprints for our customers to replicate and build infrastructure in a modular fashion,” he said.

These reference architectures will be backed by a variety of open standards, ranging from Ethernet and PCIe for connectivity to DAOS for distributed storage and SYCL for programming, which Intel said helps prevent vendor lock-in.

When it comes to software, Intel executive Bill Pearson said the company’s open approach means that partners and customers can choose from a variety of tools from different vendors to address every software need for an AI system. He contrasted this with Nvidia’s approach, which has been to create many of its own tools that only work with Nvidia GPUs.

“Rather than us creating all the tools that a customer or developer might need, we rely on our ecosystem partners to do that. We work with them, and we help the customers then choose the one that makes sense for their particular enterprise,” said Pearson, who is vice president of software in the Data Center and AI Group.

A key aspect of this open ecosystem software approach is the Open Platform for Enterprise AI (OPEA), a group started earlier this year under the Linux Foundation that is meant to serve as the foundation for microservices that can be used for AI systems. Members of the group range from chip companies like AMD, Intel and Rivos, to a wide variety of software providers, including virtualization providers like VMware and Red Hat as well as AI and machine learning platforms such as Domino, Clarifai and Intel-backed Articul8.

“When we look at how to implement a solution leveraging those microservices, every component of the stack has multiple offers, and so you need to be very specific about what’s going to work best for you. Is there a preference that you have? Is it a purchasing agreement? Is it a technical preference? Is there a relationship preference?” said Pearson.

“And then customers can choose the pieces, the components, the ingredients that are going to make sense for their business. To me, that’s one of the best things about our open ecosystem, is that we don’t hand you the answer. Instead, we give you the tools to go and select the best answer,” he added.

Key to Intel’s software approach for AI systems is a focus on retrieval-augmented generation (RAG). RAG retrieves relevant material from proprietary enterprise data and supplies it to an LLM as context, so the model can answer questions about that data without being fine-tuned or retrained.

“This finally enables organizations to customize and launch GenAI applications more quickly and more cost effectively,” Pearson said.
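The core RAG loop can be sketched in a few lines:

1. Embed the documents.
2. Find the ones most similar to the question.
3. Paste them into the prompt.

The version below is a toy that rests on stated assumptions. A bag-of-words vector stands in for a real embedding model, and an in-memory list stands in for a vector database. The final LLM call is left out. It is not Intel AI for Enterprise RAG or any OPEA component, and the documents are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the question."""
    q = embed(question)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def build_prompt(question: str, docs: list[str], k: int = 2) -> str:
    """Ground the model in retrieved enterprise text instead of retraining it."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Hypothetical internal documents.
docs = [
    "Refunds are processed within 14 days of a return request.",
    "The VPN must be used when accessing internal dashboards.",
    "Expense reports are due by the fifth business day of each month.",
]
print(build_prompt("How many days until refunds are processed?", docs, k=1))
```

In a production system, the model weights stay unchanged. Updating what the model "knows" means updating the document store.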

To help customers set up RAG-based AI applications, Intel plans to introduce Intel AI for Enterprise RAG, a catalog of solutions developed by Intel and third parties, before the end of the year. These solutions address use cases ranging from code generation and code translation to content summarization and question-and-answer.

Pearson said Intel is “uniquely positioned” to address the challenges businesses face in deploying RAG-based AI infrastructure, using technologies developed by Intel and partners. These start with validated OEM servers equipped with Gaudi and Xeon chips. They also include software optimizations, vector databases and embedding models, management and orchestration software, OPEA microservices, and RAG software.

“All of this makes it easy for enterprise customers to implement solutions based on Intel AI for Enterprise RAG,” he said.

Channel Will Be ‘Key’ For Gaudi 3 Rollout

In an interview with CRN last week, Greg Ernst, corporate vice president and general manager of Intel’s Americas sales organization and global accounts, said channel partners will be critical to getting Gaudi 3-based systems in the hands of customers.

Ernst said that to get to this point, Intel needed Gaudi 3 to win broad support from server vendors that “partners like World Wide Technology can really rally around.” He added that Intel has “done a lot of learning with the partners of how to sell the product and implement product support.”

“Now we’re ready for scale, and the partners are going to be key for that,” he said.

Rohit Badlaney, general manager of IBM Cloud product and industry platforms, told CRN that the company’s “build” independent software vendor (ISV) partners, value-added distributors and global systems integrators are three major ways IBM plans to sell cloud services based on Gaudi 3, which will largely be focused around its watsonx AI platform.

“We’ve got a whole sales ecosystem team that’s going to focus on build ISVs, both embedding and building with our watsonx platform, the same kind of efforts going on now with our Red Hat developer stack,” he said at Intel’s press event last month.

Badlaney said IBM Cloud has tested Intel’s “price performance advantage” claims for Gaudi 3 and is impressed with what it has found.

“As we look at the capability in Gaudi 3, specifically for our watsonx data and AI platform, it really differentiated in our testing from a cost-performance perspective. So the first set of use cases that we’ll apply it to is inferencing around our own branded models and some of the other models that we see,” he said.

Vivek Mohindra, senior vice president of corporate strategy at Dell, said by adopting Gaudi 3 into its PowerEdge XE9680 portfolio, his company is giving partners and customers an alternative to systems with accelerator chips from Intel’s rivals. He added that Dell’s Omnia software for managing high-performance computing and AI workloads works well with the OPEA microservices, giving enterprises an “easy button” to deploy new infrastructure.

“It gives customers a choice as well, and then on software, with our Omnia stack being interoperable with [Intel’s] OPEA, that provides for an immense ability for the customers to adopt and scale it relatively easily,” he said at Intel’s press event.

Alexey Stolyar, CTO of Northbrook, Ill.-based systems integrator International Computer Concepts, told CRN that his company started taking high-level training courses around Gaudi 3 and that he can see the need for cost-effective AI systems enabled by such chips, mainly because of how much power it takes to train or fine-tune massive models.

“What you’re going to find is that a lot of the world is going to focus on smaller, more efficient, more precise models than these huge ones. The huge ones are good at general tasks, but they’re not good at very specific tasks. The enterprises are going to start developing either their own models or fine-tune specific open-source models, but they’re going to be smaller and they’re going to be more efficient,” he said.

Stolyar said International Computer Concepts hasn’t started proactively talking to customers about Gaudi 3 systems. However, one customer has already approached the company about developing a Gaudi 3 system for a turnkey appliance, which the customer plans to sell for specific workloads. The customer cited benchmarks in which the chip has performed well.

However, the solution provider executive said he isn’t sure how big of an opportunity Gaudi 3 represents yet and added that Intel’s success will heavily rely on how easy Gaudi 3 systems are to use in relation to those powered by Nvidia chips and software.

“I think customers want alternatives. I think having good competition is good, but it’s not going to happen until that ease of use is there. Nvidia has been doing this for a while. They’ve been fine-tuning their software packages and so on for a long time in that ecosystem,” he said.

A senior leader at a solution provider told CRN that his company’s conversations with Intel representatives have given him the impression that the chipmaker isn’t seeking to take Nvidia head-on with Gaudi 3 but is instead hoping to win a “percentage” of the AI market.

“They’ve been talking about Gaudi 3 for a long time: ‘Hey, this is going to be the thing for us. We’re going to compete.’ But then I think they’re also sort of coming in with tempered expectations of like, ‘Hey, let’s compete in a percentage of this market. We’re not going to take on Nvidia, per se, head-to-head, but we can chew away at some of this and give customers options. Let’s pick out five customers and go talk to them,’” said the executive, who asked not to be identified in order to speak frankly about his work with Intel.

The solution provider leader said he does think there could be a market for cost-effective AI systems like the ones that are powered by Gaudi 3 because he has heard from customers who are becoming more conscious about high AI infrastructure costs.

“In some ways, you’re conceding that somebody else has already won when you take that approach, but it’s also pretty logical to say, ‘Hey, if it does all these things, you would be a fool not to look at it, because it’ll save you money and power and everything else.’ But that’s not a take-over-the-world type of strategy,” he said.
