Nvidia Reveals 4-GPU GB200 NVL4 Superchip, Releases H200 NVL Module

by nlqip

At Supercomputing 2024, the AI computing giant shows off what is likely its biggest AI ‘chip’ yet—the four-GPU Grace Blackwell GB200 NVL4 Superchip—while it announces the general availability of its H200 NVL PCIe module for enterprise servers running AI workloads.

Nvidia is revealing what is likely its biggest AI “chip” yet—the four-GPU Grace Blackwell GB200 NVL4 Superchip—in another sign of how the company is stretching the traditional definition of semiconductor chips to fuel its AI computing ambitions.

Announced at the Supercomputing 2024 event on Monday, the product is a step up from Nvidia’s recently launched Grace Blackwell GB200 Superchip that was revealed in March as the company’s new flagship AI computing product. The AI computing giant also announced the general availability of its H200 NVL PCIe module, which will make the H200 GPU launched earlier this year more accessible for standard server platforms.

[Related: 8 Recent Nvidia Updates: Nutanix AI Deal, AI Data Center Guidelines And More]

The GB200 NVL4 Superchip is designed for “single server Blackwell solutions” running a mix of high-performance computing and AI workloads, said Dion Harris, director of accelerated computing at Nvidia, in a briefing last week with journalists.

These server solutions include Hewlett Packard Enterprise’s Cray Supercomputing EX154n Accelerator Blade, which was announced last week and packs up to 224 B200 GPUs. The Cray blade server is expected to become available by the end of 2025, per HPE.

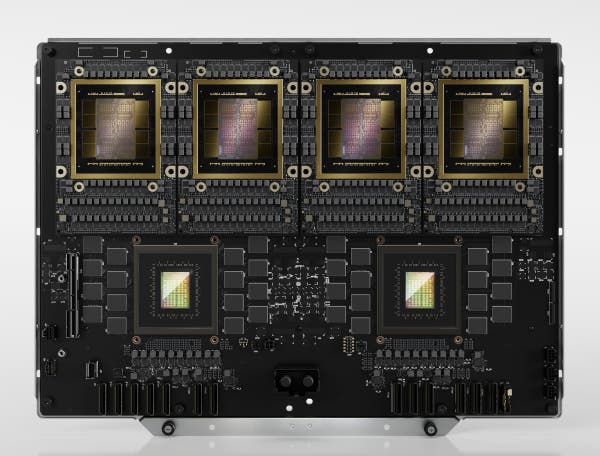

Whereas the GB200 Superchip resembles a slick black motherboard that connects one Arm-based Grace GPU with two B200 GPUs based on Nvidia’s new Blackwell architecture, the NVL4 product seems to roughly double the Superchip’s surface area with two Grace CPUs and four B200 GPUs on a larger board, according to an image shared by Nvidia.

Like the standard GB200 Superchip, the GB200 NVL4 uses the fifth generation of Nvidia’s NVLink chip-to-chip interconnect to enable high-speed communication between the CPUs and GPUs. The company has previously said that this generation of NVLink enables bidirectional throughput per GPU to reach 1.8 TB/s.

Nvidia said the GB200 NVL4 Superchip features 1.3 TB of coherent memory that is shared across all four B200 GPUs using NVLink.

To demonstrate the computing horsepower of the GB200 NVL4, the company compared it to the previously released GH200 NVL4 Superchip, which was originally introduced as the Quad GH200 a year ago and consists of four Grace Hopper GH200 Superchips. The GH200 Superchip contains one Grace CPU and one Hopper H200 GPU.

Compared to the GH200 NVL4, the GB200 NVL4 is 2.2 times faster for a simulation workload using MILC code, 80 percent faster for training the 37-million-parameter GraphCast weather forecasting AI model and 80 percent faster for inference on the 7-billion-parameter Llama 2 model using 16-bit floating-point precision.

The company did not provide any further specifications or performance claims.

In the briefing with journalists, Harris said Nvidia’s partners are expected to introduce new Blackwell-based solutions at Supercomputing 2024 this week.

“The rollout of Blackwell is progressing smoothly thanks to the reference architecture, enabling partners to quickly bring products to market while adding their own customizations,” he said.

Nvidia Releases H200 NVL PCIe Module

In addition to revealing the GB200 NVL4 Superchip, Nvidia announced that its previously announced H200 NVL PCIe card will become available in partner systems next month.

The NVL4 module contains Nvidia’s H200 GPU that launched earlier this year in the SXM form factor for Nvidia’s DGX system as well as HGX systems from server vendors. The H200 is the successor to the company’s H100 that uses the same Hopper architecture and helped make Nvidia into the dominant provider of AI chips for generative AI workloads.

What makes it different from a standard PCIe design is that the H200 NVL consists of either two or four PCIe cards that are connected together using Nvidia’s NVLink interconnect bridge, which enables a bidirectional throughput per GPU of 900 GB/s. The product’s predecessor, the H100 NVL, only connected two cards via NVLink.

It’s also air-cooled in contrast to the H200 SXM coming with options for liquid cooling.

The dual-slot PCIe form factor makes the H200 NVL “ideal for data centers with lower power, air-cooled enterprise rack designs with flexible configurations to deliver acceleration for every AI and HPC workload, regardless of size,” according to Harris.

“Companies can use their existing racks and select the number of GPUs that best fit their needs, from one, two, four or even eight GPUs with NVLink domain scaling up to four,” he said. “Enterprises can use H200 NVL to accelerate AI and HPC applications while also improving energy efficiency through reduced power consumption.”

Like its SXM cousin, the H200 NVL comes with 141GB of high-bandwidth memory and 4.8 TB/s of memory bandwidth in contrast to the H100 NVL’s 94-GB and 3.9-TB/s capacities, but its max thermal design power only goes up to 600 watts instead of the 700-watt maximum of the H200 SXM version, according to the company.

This results in the H200 NVL having slightly less horsepower than the SXM module. For instance, the H200 NVL can only reach 30 teraflops in 64-bit floating point (FP64) and 3,341 teraflops in 8-bit integer (INT8) performance whereas the SXM version can achieve 34 teraflops in FP64 and 3,958 teraflops in INT8 performance. (A teraflop is a unit of measurement for one trillion floating-point operations per second.)

When it comes to running inference on a 70-billion-parameter Llama 3 model, the H200 NVL is 70 percent faster than the H100 NVL, according to Nvidia. As for HPC workloads, the company said the H200 NVL is 30 percent faster for reverse time migration modeling.

The H200 NVL comes with a five-year subscription to the Nvidia AI Enterprise software platform, which comes with Nvidia NIM microservices for accelerating AI development.

Source link

lol

At Supercomputing 2024, the AI computing giant shows off what is likely its biggest AI ‘chip’ yet—the four-GPU Grace Blackwell GB200 NVL4 Superchip—while it announces the general availability of its H200 NVL PCIe module for enterprise servers running AI workloads. Nvidia is revealing what is likely its biggest AI “chip” yet—the four-GPU Grace Blackwell GB200…

Recent Posts

- Palo Alto Networks patches two firewall zero-days used in attacks

- Vulnerability Summary for the Week of November 11, 2024 | CISA

- US space tech giant Maxar discloses employee data breach

- CISA Adds Two Known Exploited Vulnerabilities to Catalog | CISA

- CISA Adds Three Known Exploited Vulnerabilities to Catalog | CISA