5 Cool AI And HPC Servers With AMD Instinct MI300 Chips

by nlqip

CRN rounds up five cool AI and high-performance computing servers from Dell Technologies, Lenovo, Supermicro and Gigabyte that use AMD’s Instinct MI300 chips, which launched a few months ago to challenge Nvidia’s dominance in the AI computing space.

AMD is making its biggest challenge yet to Nvidia’s dominance in the AI computing space with its Instinct MI300 accelerator chips. A few months after launch, multiple MI300 servers are now available or set to release soon as alternatives to systems with Nvidia’s popular chips.

During the chip designer’s first-quarter earnings call Tuesday, AMD Chair and CEO Lisa Su said the MI300 has become the “fastest-ramping product” in its history after generating more than $1 billion in “total sales in less than two quarters.”

As a result, AMD now expects the MI300 to generate $4 billion in revenue this year, $500 million higher than a forecast the company gave in January.

“What we see now is just greater visibility to both current customers as well as new customers committing to MI300,” Su said.

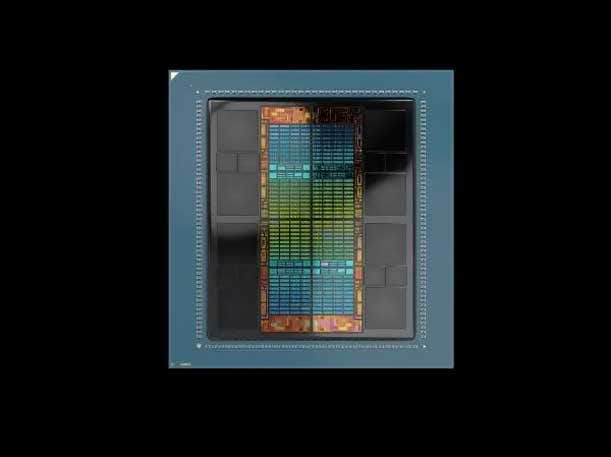

The MI300 series currently consists of two products: the MI300X GPU, which comes with 192 GB of HBM3 high-bandwidth memory, and the MI300A APU, which combines Zen 4 CPU cores and CDNA 3 GPU cores with 128 GB of HBM3 memory on the same chip package.

The chips are in systems available now or coming soon from major OEMs, including Dell Technologies, Lenovo, Supermicro and Gigabyte.

The Santa Clara, Calif.-based company has pitched the MI300X as a strong competitor to Nvidia’s popular and powerful H100 data center GPU for AI training and inference. For instance, the company said its MI300X platform, which combines eight MI300X GPUs, offers 60 percent higher inference throughput for the 176-billion-parameter Bloom model and 40 percent lower chat latency for the 70-billion-parameter Llama 2 model compared with Nvidia’s eight-GPU H100 HGX platform.

The MI300A, on the other hand, is aimed more at the convergence of high-performance computing and AI workloads. For 64-bit floating point calculations, AMD said the MI300A is 80 percent faster than the H100. Compared with Nvidia’s GH200 Grace Hopper Superchip, which combines its Grace CPU with an H200 GPU, the MI300A offers two times greater HPC performance, according to AMD.

For all the advantages AMD is finding with the MI300 chips, the company has to contend with the fact that Nvidia is operating on an accelerated chip road map and plans to release successors to the H100, H200 and GH200 processors later this year.

In the recent earnings call, Su said AMD’s strategy is not about one product but a “multiyear, multigenerational road map” and teased that it will share details in the “coming months” about new accelerator chips arriving “later this year into 2025.”

“We’re very confident in our ability to continue to be very competitive. Frankly, I think we’re going to get more competitive,” she said.

What follows are five cool AI and HPC servers from Dell, Lenovo, Supermicro and Gigabyte that use either AMD’s Instinct MI300X GPUs or its Instinct MI300A APUs.

Dell PowerEdge XE9680

Dell’s PowerEdge XE9680 is an air-cooled 6U server that is meant to power the most complex generative AI, machine learning, deep learning and high-performance computing workloads.

The server connects eight AMD Instinct MI300X GPUs using the AMD Infinity Fabric, giving the system 1.5 TB of HBM3 high-bandwidth memory and over 21 petaflops of 16-bit floating point (FP16) performance. The system supports a maximum of 4 TB of DDR5 memory across 32 DIMM slots, and it comes with up to 10 PCIe Gen 5 expansion slots.
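The 1.5 TB figure follows from the per-GPU capacity cited earlier: eight MI300X GPUs at 192 GB each. As a quick check, here is a minimal Python sketch of that arithmetic. The function and constant names are illustrative, and it assumes the binary convention of 1,024 GB per TB.

```python
# Per-GPU HBM3 capacity and GPU count as cited in the article.
HBM3_PER_MI300X_GB = 192
GPUS_PER_SERVER = 8

# Assumption: binary convention, 1 TB = 1,024 GB.
GB_PER_TB = 1024


def total_hbm_gb(gpu_count: int, gb_per_gpu: int) -> int:
    """Return the pooled HBM capacity in GB for identical GPUs."""
    return gpu_count * gb_per_gpu


total_gb = total_hbm_gb(GPUS_PER_SERVER, HBM3_PER_MI300X_GB)
total_tb = total_gb / GB_PER_TB

print(f"{GPUS_PER_SERVER} x {HBM3_PER_MI300X_GB} GB = {total_gb} GB ({total_tb} TB)")
# prints: 8 x 192 GB = 1536 GB (1.5 TB)
```

The same calculation applies to the other eight-GPU MI300X servers in this roundup, which quote the same 1.5 TB total.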

With availability expected this summer, the PowerEdge XE9680’s host processors are two 4th Generation Intel Xeon Scalable CPUs, each of which provides up to 56 cores.

The server supports up to eight 2.5-inch NVMe/SAS/SATA SSDs for a total of 122.88 TB and up to 16 E3.S NVMe direct drives, also for a total of 122.88 TB.

The PowerEdge XE9680 comes with the Dell OpenManage systems management software portfolio as well as security features like silicon-based root of trust.

Lenovo ThinkSystem SR685a V3

Lenovo’s recently unveiled ThinkSystem SR685a V3 is an air-cooled 8U server that is designed to tackle the most demanding AI workloads such as large language models.

Available now, the server connects eight AMD Instinct MI300X GPUs using the AMD Infinity Fabric, which gives it 1.5 TB of HBM3 high-bandwidth memory capacity and up to 1 TBps of peak aggregate I/O bandwidth. It also supports up to 3 TB of DDR5 memory across 24 DIMM slots and comes with up to 10 PCIe Gen 5 slots for attaching expansion cards.

The ThinkSystem SR685a V3 is also compatible with Nvidia’s H100 and H200 GPUs as well as the chip designer’s upcoming B100 GPUs.

The server’s host processors are two 4th Generation AMD EPYC CPUs, which can be upgraded to a future generation of the server chips, according to the OEM.

It supports up to 16 2.5-inch hot-swap NVMe SSDs. It also comes with two M.2 boot drives.

Lenovo said its air-cooling design provides “substantial thermal headroom,” allowing the GPUs and CPUs to provide sustained maximum performance.

The server comes with Lenovo’s XClarity systems management software.

Supermicro AS-8125GS-TNMR2

Supermicro’s AS-8125GS-TNMR2 is an air-cooled 8U server that is built to eliminate AI training bottlenecks for large language models and serve as part of massive training clusters.

Available now, the server connects eight AMD Instinct MI300X GPUs using the AMD Infinity Fabric, which enables it to provide a 1.5-TB pool of HBM3 high-bandwidth memory. This is in addition to the 6 TB of maximum DDR5 memory supported across 24 DIMM slots.

The AS-8125GS-TNMR2 comes with eight PCIe Gen 5 low-profile expansion slots that enable direct connectivity between eight 400G networking cards and the eight GPUs to support massive clusters. It also features two PCIe Gen 5 full-height, full-length expansion slots.

The host processors are two 4th Generation AMD EPYC CPUs, each of which sports up to 128 cores.

It comes with 12 PCIe Gen 5 NVMe U.2 drives with an option for four additional drives, two hot-swap 2.5-inch SATA drives and two M.2 NVMe boot drives.

Other features include a built-in server management tool, Supermicro SuperCloud Composer, Supermicro Server Manager and hardware root of trust.

Gigabyte G593-ZX1

Gigabyte’s G593-ZX1 is an air-cooled 5U server that is designed for AI training and inference, particularly when it comes to large language models and other kinds of massive AI models.

The server connects eight AMD Instinct MI300X GPUs using the AMD Infinity Fabric, which allows it to provide 1.5 TB of HBM3 high-bandwidth memory and 42.4 TBps of peak theoretical aggregate memory bandwidth. It also supports 24 DIMMs of DDR5 memory and comes with 12 PCIe Gen 5 expansion slots for GPUs, networking cards or storage devices.
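Dividing the aggregate bandwidth figure across the eight GPUs gives a rough per-GPU number. The short Python sketch below does that division. It assumes the bandwidth is split equally among identical GPUs, and the names are illustrative.

```python
# Aggregate peak theoretical memory bandwidth and GPU count from the article.
AGGREGATE_BANDWIDTH_TBPS = 42.4
GPUS_PER_SERVER = 8


def per_gpu_bandwidth_tbps(aggregate_tbps: float, gpu_count: int) -> float:
    """Return per-GPU bandwidth, assuming an even split across GPUs."""
    if gpu_count <= 0:
        raise ValueError("gpu_count must be positive")
    return aggregate_tbps / gpu_count


per_gpu = per_gpu_bandwidth_tbps(AGGREGATE_BANDWIDTH_TBPS, GPUS_PER_SERVER)
print(f"Per-GPU peak memory bandwidth: {per_gpu:.1f} TBps")
# prints: Per-GPU peak memory bandwidth: 5.3 TBps
```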

The G593-ZX1’s host processors are two 4th Generation AMD EPYC CPUs.

It supports eight 2.5-inch NVMe/SATA/SAS-4 hot-swap drives.

The server’s features include a tool-less design for drive bays, an optional TPM 2.0 module, Smart Ride Through, Smart Crisis Management and Protection, and a dual ROM architecture.

Availability is expected in the first half of the year.

Supermicro AS-2145GH-TNMR

Supermicro’s AS-2145GH-TNMR is a liquid-cooled 2U server that is targeted at accelerated high-performance computing workloads.

Available now, the server comes with four AMD Instinct MI300A accelerators, each of which combines Zen 4 CPU and CDNA 3 GPU cores with HBM3 memory on the same chip package to break down traditional bottlenecks in CPU-GPU communication. With 128 GB of HBM3 high-bandwidth memory on each chip, the system provides a total of 512 GB of memory.

The AS-2145GH-TNMR supports the option for eight 2.5-inch U.2 NVMe hot-swap drives. It also comes with two M.2 NVMe boot drives.

Supermicro said the server’s direct-to-chip custom liquid cooling technology enables data center operators to reduce total cost of ownership by more than 51 percent in comparison to an air-cooled solution. It also reduces the number of fans by 70 percent.

The AS-2145GH-TNMR comes with two compact PCIe Gen 5 AIOM slots and eight PCIe Gen 5 x16 slots to support 400G Ethernet/InfiniBand networks for supercomputing clusters.

Other features include a built-in server management tool, Supermicro SuperCloud Composer, Supermicro Server Manager and SuperDoctor 5.
