12 Big Nvidia, Intel And AMD Announcements At Computex 2024

by nlqip

With Nvidia, Intel and AMD signaling that the semiconductor industry is entering a new era of hyperdrive, Computex 2024 was an event not to miss for channel partners who are building businesses around next-generation PCs and data centers, especially when it comes to AI.

The future of AI computing was on full display at this year’s Computex event in Taiwan, with Nvidia, Intel and AMD all making announcements for new chips, products and services that could change the way AI applications are developed, deployed and processed.

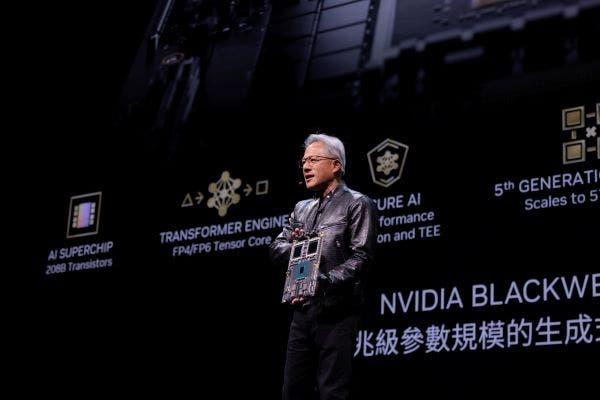

After revealing its next-generation Blackwell data center GPU architecture in March, Nvidia CEO Jensen Huang on Sunday attracted another arena full of attendees on the island nation to announce an expanded road map full of new GPUs, CPUs and other kinds of chips that will power future data centers.

[Related: Analysis: How Nvidia Surpassed Intel In Annual Revenue And Won The AI Crown]

The AI chip giant made several other announcements, including the expansion of its MGX modular designs for Blackwell GPUs, the launch of its NIM inference microservices, and its plan to boost the development of AI applications for PCs running on its RTX GPUs.

AMD CEO Lisa Su came to Computex armed with plenty of significant announcements too, revealing on Monday an expanded data center accelerator chip road map that starts with the 288-GB Instinct MI325X GPU coming later this year. She also disclosed that AMD plans to soon release the Ryzen AI 300 chips for AI-enabled laptops as well as the Ryzen 9000 desktop series and teased the 192-core EPYC “Turin” processors coming later this year.

Pat Gelsinger, Intel’s CEO, followed on Tuesday with announcements set to shake up the PC and data center markets, ranging from the launch of the new Xeon 6 E-core CPUs to the reveal of the upcoming Lunar Lake processors for AI-enabled PCs. He also highlighted ecosystem momentum and platform pricing for Intel’s upcoming Gaudi 3 AI chip.

With all three companies signaling that the semiconductor industry is entering a new era of hyperdrive, Computex 2024 was an event not to miss for channel partners who are building businesses around next-generation PCs and data centers, especially when it comes to AI.

What follows are 12 big announcements made by Nvidia, Intel and AMD at Computex 2024.

Nvidia Reveals Blackwell GPU Successors In Expanded Data Center Road Map

Nvidia revealed that it plans to release successors to its upcoming Blackwell GPUs in the next two years and launch a second-generation CPU in 2026.

The Santa Clara, Calif.-based company made the disclosures in an expanded data center road map at Computex 2024 in Taiwan Sunday, where it provided basic details for next-generation GPUs, CPUs, network switch chips and network interface cards.

The plan to release a new data center GPU architecture every year is part of a one-year release cadence Nvidia announced last year, representing an acceleration of the company’s previous strategy of releasing new GPUs roughly every two years.

“Our basic philosophy is very simple: build the entire data center scale, disaggregate it and sell it to you in parts on a one-year rhythm, and we push everything to the technology limits,” Nvidia CEO Jensen Huang said in his Computex keynote on Sunday.

The expanded road map is coming less than two months after Nvidia revealed its next-generation Blackwell data center GPU architecture, which is expected to debut later this year. At its GTC event in March, the company said Blackwell will enable up to 30 times greater inference performance and consume 25 times less energy for massive AI models compared to the Hopper architecture, which debuted in 2022 with the H100 GPU.

In the road map revealed by Huang during his Computex keynote, the company outlined a plan to follow up Blackwell in 2025 with an updated architecture called Blackwell Ultra, which the CEO said will be “pushed to the limits.”

In the same time frame, the company is expected to release an updated version of its Spectrum-X800 Ethernet Switch, called the Spectrum Ultra X800.

Then in 2026, Nvidia plans to debut an all-new GPU architecture called Rubin, which will use HBM4 memory. This will coincide with several other new chips, including a follow-up to Nvidia’s Arm-based Grace CPU called Vera.

The company also plans to release in 2026 the NVLink 6 Switch, which will double chip-to-chip bandwidth to 3600 GBps; the CX9 SuperNIC, which will be capable of 1600 Gbps; and the X1600 generation of InfiniBand and Ethernet switches.

“All of these chips that I’m showing you here are all in full development, 100 percent of them, and the rhythm is one year at the limits of technology, all 100 percent architecturally compatible,” Huang said.

Nvidia Enables Modular Blackwell Server Designs With MGX

Nvidia announced that it’s enabling a wide range of server designs for its Blackwell GPUs through the company’s MGX modular reference design platform.

The company said MGX enables server vendors to “quickly and cost-effectively build more than 100 system design configurations,” and they get to choose which GPU, CPU and data processing unit (DPU) they want to use.

Among the building blocks available to server vendors is the newly revealed GB200 NVL2, which is a single-node server design containing two Blackwell GPUs and two Grace CPUs that’s intended to fit into existing data centers.

The MGX platform also supports other Blackwell-based products such as the B100 GPU, B200 GPU, GB200 Grace Blackwell Superchip and GB200 NVL72.

In addition, the platform will support Intel’s upcoming Xeon 6 P-core processors and AMD’s upcoming EPYC “Turin” processors. Nvidia said the two companies plan to deliver, “for the first time, their own CPU host processor module designs.”

AMD Reveals Zen 5-Based Ryzen 9000 Desktop CPUs

AMD revealed its next-generation Ryzen 9000 desktop processors, saying they will use the company’s new Zen 5 architecture and start shipping in July.

At Computex 2024 Monday, the Santa Clara, Calif.-based company called the lineup’s flagship chip, the 16-core Ryzen 9 9950X, the “fastest consumer desktop processor” due to its ability to deliver “exceptional single-threaded and multi-threaded performance.”

AMD also announced two additional models for its Ryzen 5000 desktop series, extending the longevity of the processor family’s AM4 platform that debuted in 2016.

The Ryzen 9000 series features up to 16 cores, 32 threads, a maximum boost frequency of 5.7GHz, a base frequency of 4.4GHz, a total cache of 80 MB and a thermal design power of 170 watts. All processors support PCIe Gen 5, DDR5 memory, USB4 and Wi-Fi 7.

They use AMD’s Zen 5 architecture, which provides a roughly 16 percent uplift in instructions per clock (IPC) for PCs, according to the company.

AMD said the Ryzen 9000 processors are faster than Intel’s 14th-generation Core CPUs that launched last year across gaming, productivity and content creation workloads.

Compared to Intel’s 24-core Core i9-14900K, the 16-core Ryzen 9 9950X is 7 percent faster on the Procyon Office benchmark, 10 percent faster on the Puget Photoshop benchmark, 21 percent faster on the Cinebench R24 nT benchmark, 55 percent faster on Handbrake and 56 percent faster on Blender, according to AMD.

The company also claimed that the Ryzen 9 9950X is 20 percent faster for running large language models, particularly Mistral, than the Core i9-14900K.

In gaming, AMD said the Ryzen 9 9950X is anywhere from 4 percent faster (for Borderlands 3) to 23 percent faster (for Horizon Zero Dawn) compared to the Core i9-14900K.

The Ryzen 9000 processors are compatible with the AM5 platform, including the new X870E and X870 chipsets, which enable the latest in I/O, memory and connectivity.

The new chipsets support up to 44 PCIe lanes and direct-to-processor PCIe 5.0 NVMe connectivity. The X870E has 24 PCIe 5.0 lanes, 16 of which are dedicated to graphics.

As for the expanded Ryzen 5000 series, the two new processors feature up to 16 cores, a maximum boost frequency of 4.8GHz, a base frequency of 3.3GHz, a total cache of 72 MB and a thermal design power of 105 watts.

AMD Reveals 288-GB Instinct MI325X, New Yearly AI Chip Cadence

AMD announced that it plans to release a new Instinct data center GPU later this year with significantly greater high-bandwidth memory than its MI300X chip or Nvidia’s H200, enabling servers to handle larger generative AI models than before.

At Computex 2024 in Taiwan Monday, the Santa Clara, Calif.-based company revealed the Instinct MI325X GPU. Set to arrive in the fourth quarter, it will provide a substantial upgrade in memory capacity and bandwidth over the MI300X, which became one of AMD’s “fastest-ramping” products to date after launching in December.

[Read More: AMD Targets Nvidia With 288-GB Instinct MI325X GPU Coming This Year]

Whereas the MI300X sports 192 GB of HBM3 high-bandwidth memory and a memory bandwidth of 5.3 TBps, the MI325X features up to 288 GB of HBM3e and 6 TBps of bandwidth, according to AMD. Eight of these GPUs will fit into what’s called the Instinct MI325X Platform, which has the same architecture as the MI300X platform that goes into servers designed by OEMs.

The chip designer said the MI325X has multiple advantages over Nvidia’s H200, which was expected to start shipping in the second quarter as the successor to the H100.

For one, the MI325X’s 288-GB capacity is more than double the H200’s 141 GB of HBM3e, and its memory bandwidth is 30 percent faster than the H200’s 4.8 TBps, according to AMD.
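The memory comparisons above come down to simple ratios. The following is a minimal sketch that recomputes them from the rounded figures quoted in this article; the `SPECS` table and the `ratio` helper are illustrative names, not anything published by AMD or Nvidia.

```python
# Memory specs as quoted in this article (rounded vendor figures).
# Capacity is in GB, bandwidth in TB/s. Names here are illustrative only.
SPECS = {
    "MI300X": {"capacity_gb": 192, "bandwidth_tbps": 5.3},
    "MI325X": {"capacity_gb": 288, "bandwidth_tbps": 6.0},
    "H200": {"capacity_gb": 141, "bandwidth_tbps": 4.8},
}


def ratio(new: str, old: str, field: str) -> float:
    """Return how many times larger `field` is on `new` than on `old`."""
    return SPECS[new][field] / SPECS[old][field]


if __name__ == "__main__":
    for baseline in ("MI300X", "H200"):
        cap = ratio("MI325X", baseline, "capacity_gb")
        bw = ratio("MI325X", baseline, "bandwidth_tbps")
        print(f"MI325X vs {baseline}: {cap:.2f}x capacity, {bw:.2f}x bandwidth")
```

With these rounded inputs, the capacity ratio against the H200 comes out slightly above 2x, matching the "more than double" claim. The bandwidth ratio comes out at roughly 1.25x, a little under the 30 percent AMD cites, which may simply reflect rounding in the quoted specs.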

The company said the MI325X’s peak theoretical throughput is 2.6 petaflops for 8-bit floating point (FP8) and 1.3 petaflops for 16-bit floating point (FP16). These figures are 30 percent higher than what the H200 can accomplish, AMD said.

In addition, the MI325X enables servers to handle a 1-trillion-parameter model in its entirety, double the size of what’s possible with the H200, according to the company.

AMD announced the details as part of a newly disclosed plan to release a new data center GPU every year starting with the MI325X, which, like the MI300X, uses the company’s CDNA 3 architecture that is expressly designed for data center applications.

In an extended road map, AMD said it will release the MI325X later this year. It will then debut in 2025 the Instinct MI350 series that will use its CDNA 4 architecture to provide increased compute performance and “memory leadership,” according to AMD. The next generation, the Instinct MI400 series, will use a future iteration of CDNA architecture and arrive in 2026.

AMD Reveals Ryzen AI 300 Chips For Copilot+ PCs

AMD revealed its third generation of Ryzen processors for AI PCs with a new brand name, claiming that they beat the latest chips from Intel, Apple and Qualcomm.

The Santa Clara, Calif.-based company showed off the new processors, called the Ryzen AI 300 series, at Computex 2024 in Taiwan on Monday and called them the “world’s best” for PCs that are part of Microsoft’s new Copilot+ PC program.

[Read More: AMD’s Ryzen AI 300 Chips For Copilot+ PCs Take Aim At Qualcomm, Apple And Intel]

The announcement was made roughly two weeks after Microsoft revealed the first wave of Copilot+ PCs, which come with exclusive AI features like Recall for Windows 11 that take advantage of the device’s processor, namely its neural processing unit (NPU).

Qualcomm beat AMD and Intel to become the first chip company to power Copilot+ PCs with its Snapdragon X processors, saying that they will deliver “industry-leading performance” when the 20-plus laptops powered by its chips land in June.

Now AMD is hitting back against Qualcomm and taking digs at Intel and Apple too with claims that the Ryzen AI 300 series—the successor to the Ryzen 8040 series that launched in December—can outperform its rivals’ chips in NPU performance, large language model performance, productivity, graphics, multitasking, video editing and 3-D rendering.

The company said the Ryzen AI 300 processors will go into more than 100 PCs, including laptops, starting in July, from Dell Technologies, HP Inc., Lenovo, MSI, Acer and Asus.

At launch, the lineup will consist of the 12-core Ryzen AI 9 HX 370, which has a maximum boost frequency of 5.1GHz, and the 10-core Ryzen AI 9 HX 365, which boosts to 5GHz. Both have a base thermal design power of 28 watts, which can be configured down to 15 watts for more efficient designs and up to 54 watts for higher-performance designs by OEMs.

The processors use AMD’s Zen 5 CPU architecture, RDNA 3.5 GPU architecture and XDNA 2 NPU architecture, the latter of which is capable of 50 tera operations per second (TOPS).

Intel Launches Xeon 6 E-Core CPUs With Server Consolidation Push

Intel used Computex 2024 to mark the launch of its Xeon 6 E-core processors and said the lineup has “tremendous density advantages” that will enable data centers to significantly consolidate racks running on older CPUs.

The processors, code-named Sierra Forest, are the first under an updated brand for Xeon, which is ditching the generation nomenclature and moving the generation number to the end of the name.

[Read More: 4 Big Points About Intel’s New, Efficiency-Focused Xeon 6 E-Core CPUs]

They are also part of a new bifurcated server processor strategy, where Intel will release two types of Xeon processors from now on: those with performance cores (P-cores) to optimize for speed and those with efficient cores (E-cores) to optimize for efficiency.

While the company did not disclose OEMs or cloud service providers supporting Xeon 6 processors, some vendors revealed plans this week to ship new servers with the chips. These vendors included Supermicro, Lenovo, Tyan, QCT and Gigabyte.

Intel is phasing the rollout of Xeon 6 processors over the next several months, and they are divided into different categories based on performance and other features. These categories consist of the Xeon 6900, 6700, 6500 and 6300 series as well as Xeon 6 system-on-chip series. Most categories include processors with P-cores and E-cores.

The Xeon 6700E series, with the “E” suffix indicating its use of E-cores, is launching this week for servers with one or two sockets. They feature up to 144 E-cores, a maximum thermal design power of 350 watts, eight channels of DDR5 memory with up to 6400 megatransfers per second, up to 88 PCIe 5.0 or CXL 2.0 lanes and four UPI 2.0 links with up to 24 gigatransfers per second.

Intel said the Xeon 6 E-core processors provide major efficiency gains over previous generations, especially for CPUs that are more than a couple years old.

As a result, the processors can significantly reduce the number of servers with older processors needed to provide the same level of performance. This, in turn, will reduce the space and power needed for such server clusters, according to the company.

CRN published an in-depth article on Wednesday on the most important things to know about Xeon 6 E-core processors, including software support, platform enhancements, specs, how the chips compare to previous generations and AMD’s EPYC CPUs, how they can reduce energy consumption, and Intel’s broader rollout plans for Xeon 6.

Intel Reveals 8-Chip Gaudi 3 Platform Price

Intel revealed the list price of the star server platform for its upcoming Gaudi 3 AI chip, upending a norm of secrecy in the data center accelerator chip space.

On Tuesday at Computex 2024 in Taiwan, the semiconductor giant said its universal baseboard with eight Gaudi 3 OAM modules will have a list price of $125,000 for server vendors, including Dell Technologies, Hewlett Packard Enterprise, Lenovo and Supermicro, who plan to support the chip when it launches in the third quarter.

[Read More: Intel Reveals 8-Chip Gaudi 3 Platform Price, Upending Industry Norm Of Secrecy]

The $125,000 price puts the Gaudi 3 platform at two-thirds the estimated cost of Nvidia’s eight-GPU HGX H100 platform, which serves as the basis for the rival’s DGX H100 system and certain third-party servers, Anil Nanduri, vice president and head of Intel’s AI acceleration office, told CRN in an interview last Friday.
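As a rough illustration of what the two-thirds comparison implies, the sketch below backs out the per-chip share of the platform price and the HGX H100 estimate it would correspond to. Only the $125,000 list price, the eight-chip count and the two-thirds fraction come from the article; the derived numbers are back-of-the-envelope arithmetic, not prices disclosed by Intel or Nvidia, and the function names are hypothetical.

```python
# Figures quoted in the article.
GAUDI3_PLATFORM_PRICE_USD = 125_000   # list price of the eight-chip baseboard
CHIPS_PER_PLATFORM = 8
PRICE_FRACTION_OF_HGX_H100 = 2 / 3    # "two-thirds the estimated cost"


def price_per_chip(platform_price: float, chips: int) -> float:
    """Split the platform list price evenly across its accelerator modules."""
    return platform_price / chips


def implied_reference_price(price: float, fraction: float) -> float:
    """Back out the reference price that `price` is a given fraction of."""
    return price / fraction


if __name__ == "__main__":
    per_chip = price_per_chip(GAUDI3_PLATFORM_PRICE_USD, CHIPS_PER_PLATFORM)
    implied = implied_reference_price(
        GAUDI3_PLATFORM_PRICE_USD, PRICE_FRACTION_OF_HGX_H100
    )
    print(f"Per-chip share of platform price: ${per_chip:,.0f}")
    print(f"Implied HGX H100 platform estimate: ${implied:,.0f}")
```

The per-chip figure is only an even split of the baseboard price, since the platform price also covers the board itself.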

Combining the price with its performance capabilities, the Gaudi 3 chip provides 2.3 times greater performance-per-dollar than Nvidia’s H100 for inference throughput and 90 percent better performance-per-dollar for training throughput, according to testing by Intel.

Nanduri said Intel decided to disclose the list prices of its Gaudi 3 and Gaudi 2 platforms because it will help customers, particularly startups who typically don’t have visibility into behind-the-scenes pricing from vendors, plan investments in AI computing more easily.

“A lot of the innovation is happening from startups. They’re not familiar with the ecosystems [and] how the purchasing, procurement [and request for quotes] work, so it helps them get a framing of what to expect,” he said.

Intel Reveals Lunar Lake Chips For Next-Gen AI PCs

Intel revealed that its next-generation Lunar Lake system-on-chips for AI-enabled laptops will begin shipping in the third quarter.

The semiconductor giant said the processors will consume 40 percent less power and enable three times greater AI performance over the first generation of Core Ultra chips, formerly code-named Meteor Lake. The upcoming chips are expected to power more than 80 AI PCs from OEMs, according to the company.

Continuing Intel’s growing embrace of the chiplet design methodology, Lunar Lake consists of a new compute tile featuring new performance core and efficient core architectures, a fourth-generation neural processing unit, an all-new GPU design code-named Battlemage and an image processing unit.

The system-on-chips also come with built-in memory to reduce latency and system power consumption and what Intel is calling the platform controller tile, which includes an integrated security solution as well as connectivity support for Wi-Fi 7, Bluetooth 5.4, PCIe Gen 5 and Gen 4, and Thunderbolt 4.

Lunar Lake’s NPU is capable of 48 tera operations per second (TOPS), which is becoming the industry’s standard way of measuring power-efficient AI performance. This is three times higher than what Meteor Lake can achieve, according to Intel.

By contrast, AMD’s upcoming Ryzen AI 300 series can reach 50 TOPS, Qualcomm’s new Snapdragon X processors can reach 45 TOPS and Apple’s M4 can reach 38 TOPS when it comes to NPU performance, according to statements made by the companies.

The Battlemage GPU within Lunar Lake processors is based on Intel’s new Xe2 GPU cores, which improve gaming and graphics performance by 50 percent over the GPU cores within the Core Ultra processors. The GPU also features new Xe Matrix Extension arrays, which enable the GPU to act as a second AI accelerator capable of 67 TOPS.

These enhancements, combined with a new integrated controller for power delivery, will enable “up to 60 percent better battery life in real-life usages” when compared to the first generation of Core Ultra processors, according to Intel.

AMD Teases 192-Core EPYC, Positions Radeon GPUs For AI

AMD teased that its fifth-generation EPYC processors, code-named Turin, will arrive in the second half of this year and feature 192 cores based on the Zen 5 architecture.

The company also announced the Versal AI Edge Series 2 platform, which “brings together FPGA programmable logic for real-time pre-processing, next-gen AI Engines powered by XDNA technology for efficient AI inference, and embedded CPUs for post-processing to deliver the highest performing single chip adaptive solution for edge AI.”

In addition, AMD said it plans to release later this month the Radeon Pro W7900 dual-slot workstation graphics card, which is “optimized to deliver scalable AI performance for platforms supporting multiple GPUs.”

It said this product and other Radeon GPUs will be supported by its ROCm 6.1 software stack, due later this month, to “make AI development and deployment with AMD Radeon desktop GPUs more compatible, accessible and scalable.”

Intel Teases Panther Lake For AI-Enabled Laptops

Intel teased the follow-up to its upcoming Lunar Lake processors for laptops and said the lineup will arrive in 2025. Code-named “Panther Lake,” the processors will “accelerate and scale our position” by taking advantage of the Intel 18A process node, CEO Pat Gelsinger said in his Computex keynote on Tuesday.

Gelsinger said the semiconductor giant expects to power on the first Panther Lake chips coming out of its fabrication plants next week.

Intel 18A is the fifth node in Gelsinger’s plan to accelerate the company’s manufacturing process road map. As Gelsinger stated months after he became CEO in 2021, the company is counting on Intel 18A to allow it to surpass Asian chip foundry rivals TSMC and Samsung in advanced chip manufacturing capabilities.

“I am so excited about this product because this represents the culmination of so much of what we have been working on since I’ve been back at the company,” he said.

Nvidia Reveals Plan To Boost RTX AI PC Development

Nvidia revealed a plan to enable developers to build AI applications for PCs running on its RTX GPUs with the new Nvidia RTX AI Toolkit.

Available later this month, the toolkit consists of tools and software development kits that allow developers to customize, optimize and deploy generative AI models on RTX AI PCs, a term the company started using for RTX-powered computers in January.

The toolkit includes the previously announced Nvidia AI Workbench, a free platform for creating, testing and customizing pretrained generative AI models.

It also includes the new Nvidia AI Inference Manager software development kit, which “enables an app to run AI locally or in the cloud, depending on the user’s system configuration or even the current workload,” according to the company.

Beyond RTX AI Toolkit, Nvidia said it’s working with Microsoft to give developers easy API access to “GPU-accelerated small language models that enable retrieval-augmented generation capabilities that run on-device as part of Windows Copilot Runtime.”

The company also announced generative AI software technologies for gaming. These include Project G-Assist, an AI assistant that can help answer questions within a game, and the ACE NIM microservice, which enables the development of digital humans for games.

Nvidia Makes Inference Microservices Generally Available

Nvidia marked the launch of its inference microservices, which are meant to help speed up the development of generative AI applications for data centers and PCs.

Known officially as Nvidia NIM, the microservices consist of AI models served in optimized containers that developers can integrate within their applications. These containers can include Nvidia software components such as Nvidia Triton Inference Server and Nvidia TensorRT-LLM to optimize inference workloads on its GPUs.

The microservices include more than 40 AI models developed by Nvidia and other companies, such as Databricks DBRX, Google Gemma, Meta Llama 3, Microsoft Phi-3, Mistral Large, Mixtral 8x22B and Snowflake Arctic.

Nvidia said nearly 200 technology partners, including Cloudera, Cohesity and NetApp, “are integrating NIM into their platforms to speed generative AI deployments for domain-specific applications.” These apps include things such as copilots, code assistants and digital human avatars.

The company also highlighted that NIM is supported by data center infrastructure software providers VMware, Nutanix, Red Hat and Canonical as well as AI tools and MLOps providers like Amazon SageMaker, Microsoft Azure AI and Domino Data Lab.

Global system integrators and service delivery partners such as Accenture, Deloitte, Quantiphi, Tata Consultancy Services and Wipro plan to use NIM to help businesses build and deploy generative AI applications, Nvidia added.

NIM is available to businesses through the Nvidia AI Enterprise software suite, which costs $4,500 per GPU per year. It’s also available to members of the Nvidia Developer Program, who can “access NIM for free for research, development and testing on their preferred infrastructure,” the company said.
