Beware of fake AI tools masking a very real malware threat

by nlqip

Generative AI (GenAI) is making waves across the world. Its popularity and widespread use have also attracted the attention of cybercriminals, giving rise to a range of cyberthreats. Yet much of the discussion around threats associated with tools like ChatGPT has focused on how the technology can be misused to help fraudsters create convincing phishing messages, produce malicious code or probe for vulnerabilities.

Perhaps fewer people are talking about the use of GenAI as a lure and a Trojan horse in which to hide malware. Examples are not too difficult to come by. Last year, for instance, we wrote about a campaign that urged Facebook users to try out the latest version of Google’s legitimate AI tool “Bard”; instead, the ads served a malicious imposter tool.

Such campaigns are examples of a worrying trend, and they’re clearly not going anywhere. It’s therefore key to understand how they work, learn to spot the warning signs, and take precautions so that your identity and finances aren’t put at risk.

How are the bad guys using GenAI as a lure?

Cybercriminals have various ways of tricking you into installing malware disguised as GenAI apps. These include:

Phishing sites

In the second half of 2023, ESET blocked over 650,000 attempts to access malicious domains containing “chapgpt” or similar text. Victims most likely arrive there after clicking a link on social media or in an email or mobile message. Some of these phishing pages may contain links to install malware disguised as GenAI software.

Web browser extensions

ESET’s H1 2024 threat report details a malicious browser extension which users are tricked into installing after being lured by Facebook ads promising to take them to the official website of OpenAI’s Sora or Google’s Gemini. Although the extension masquerades as Google Translate, it is actually an infostealer known as “Rilide Stealer V4,” which is designed to harvest users’ Facebook credentials.

Since August 2023, ESET telemetry has recorded over 4,000 attempts to install the malicious extension. According to Meta, other malicious extensions claim to offer GenAI functionality, and may even provide it in a limited form, while also delivering malware.

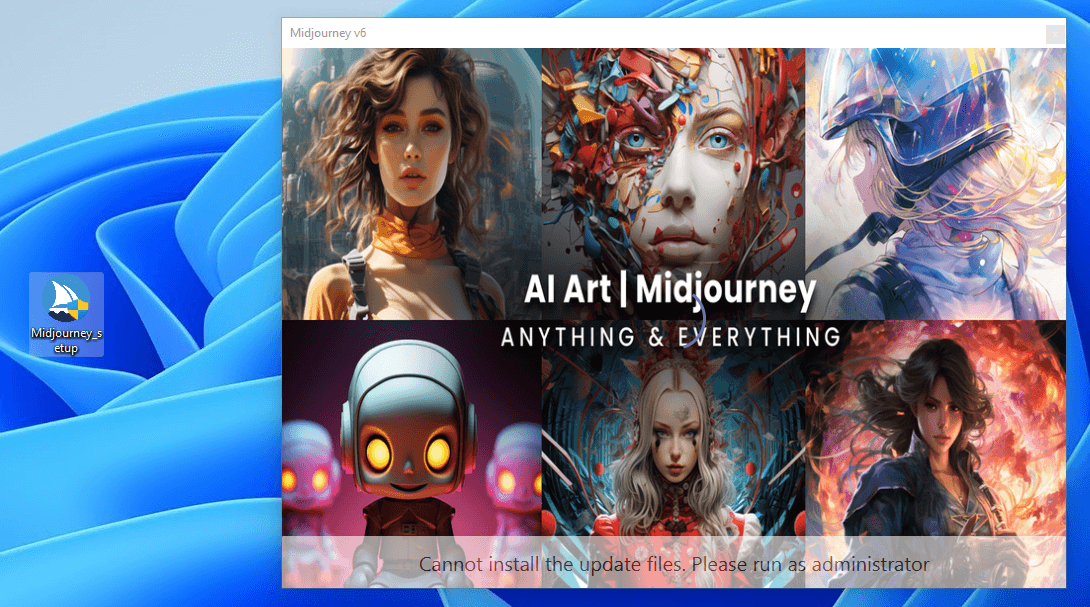

Fake apps

There have also been numerous reports of fake GenAI apps, particularly on mobile app stores, many of which contain malware. Some are laden with malicious software designed to steal sensitive information from the user’s device. This can include login credentials, personal identification details, financial information, and more.

Others are scams designed to generate revenue for the developer by promising advanced AI capabilities, often for a fee. Once downloaded, they may bombard users with ads, solicit in-app purchases, or require subscriptions for services that are either non-existent or of extremely poor quality.

Malicious ads

Threat actors are exploiting the popularity of GenAI tools to trick users into clicking on malicious ads, which are particularly prevalent on Facebook. Meta warned last year that many of these campaigns are designed to compromise “businesses with access to ad accounts across the internet.”

Threat actors hijack a legitimate account or page, change the profile information to make it appear to be an authentic ChatGPT or other GenAI-branded page, and then use the account to run fake ads. According to researchers, these ads offer links to the latest version of GenAI tools but in reality deploy infostealer malware.

The art of the lure

Humans are social creatures. We want to believe the stories we’re told. We’re also covetous. We want to get hold of the latest gadgets and apps. Threat actors exploit our greed, our fear of missing out, our credulity and our curiosity to get us to click on malicious links or download apps with malware hidden inside.

But for us to hit that install button, what’s on offer has to be pretty head-turning, and, like all the best lies, it has to be grounded in a kernel of truth. Social engineers are particularly adept at these dark arts, persuading us to click on salacious news stories about celebrities or current affairs (remember those tall tales about fake COVID-19 vaccines?). Sometimes they’ll offer us something for free, at an unbelievable discount, or before anyone else gets it. As we’ve explained before, we fall for these tricks because:

- We’re in a hurry, especially if we’re viewing the content on our mobile device

- They’re good storytellers, and are increasingly fluent, using (ironically) GenAI to tell their stories seamlessly in multiple languages

- We love to get something for nothing, even if it’s too good to be true

- The bad guys are good at sharing knowledge on what works and what doesn’t, while we’re less good at seeking out or taking advice

- We’re hardwired to respect authority, or at least the legitimacy of an offer, as long as it’s “officially” branded

When it comes to GenAI, malware-slingers are getting increasingly sophisticated. They use multiple channels to spread their lies, and they disguise malware as everything from ChatGPT and the video creator Sora AI to the image generators Midjourney and DALL-E and the photo editor Evoto. Many of the versions they tout, such as “ChatGPT 5” or “DALL-E 3”, aren’t yet available, which is exactly what draws victims in.

They ensure malware continues to fly under the radar by regularly adapting their payloads to avoid detection by security tools. And they take a great deal of time and effort to ensure their lures (such as Facebook ads) look the part. If it doesn’t look official, who’s going to download it?

What could be at risk?

So what’s the worst that could happen? If you click to download a fake GenAI app on your mobile device or from a website and it installs malware, what’s the end goal for the bad guys? In many cases, it’s to deploy an infostealer. As the name suggests, this type of malware is designed to harvest sensitive information. That could include credentials for your online accounts (such as work log-ins), stored credit card details, session cookies (which can be used to bypass multifactor authentication), assets stored in crypto wallets, data streams from instant messaging apps, and much more.

It’s not just about infostealers, of course. Cybercriminals could theoretically hide any type of malware in apps and malicious links, including ransomware and remote access Trojans (RATs). For the victim, this could lead to:

- A hacker gaining complete remote control over your PC/mobile phone and anything stored on it. They could use that access to steal your most sensitive personal and financial information, or turn your machine into a “zombie” computer to launch attacks on others

- They could use your personal information for identity fraud, which can be extremely distressing, not to mention expensive, for the victim

- They could use financial and identity details to obtain new credit lines in your name, or to steal crypto assets and access and drain bank accounts

- They could even use your work credentials to launch an attack on your employer or on a partner/supplier organization. A recent digital extortion campaign that used infostealer malware to gain access to Snowflake accounts led to the compromise of tens of millions of customer details

How to avoid malicious GenAI lures

Some tried-and-tested best practices should keep you on the right track and away from GenAI threats. Consider the following:

- Only install apps from official app stores

Google Play and the Apple App Store have vetting processes and regular monitoring designed to weed out malicious apps. While not foolproof, they are far safer than third-party websites or unofficial sources, which are much more likely to host malicious wares, so avoid downloading apps from those.

- Double check the developers behind apps and any reviews of their software

Before downloading an app, verify the developer’s credentials, look for other apps they have developed, and read user reviews. Suspicious apps often have poorly written descriptions, a limited developer history, and negative feedback highlighting issues.

- Be wary of clicking on digital ads

Digital ads, especially on social media platforms like Facebook, are a common vector for distributing malicious apps. Instead of clicking on ads, search for the app or tool directly in your official app store to ensure you’re getting the legitimate version.

- Check web browser extensions before installing them

Web browser extensions can enhance your web experience but can also pose security risks. Check the developer’s background and read reviews before installing any extensions. Stick to well-known developers and extensions with high ratings and substantial user feedback.

- Use comprehensive security software from a reputable vendor

Ensure you have robust security software from a reputable vendor installed on your PC and all mobile devices. This provides real-time protection against malware, phishing attempts, and other online threats.

- Stay alert to phishing attempts

Phishing remains a perennial threat. Be cautious of unsolicited messages that prompt you to click on links or open attachments. Verify the sender’s identity before interacting with any email, text, or social media message that appears suspicious.

- Enable multi-factor authentication (MFA) for all your online accounts

MFA adds an extra layer of security to your online accounts by requiring multiple verification methods. Enable MFA wherever possible to protect your accounts even if your password is compromised.

- Be skeptical of “new version” offers

As shown above, cybercriminals can’t resist exploiting the excitement around new releases. If you see an offer to download a new version of a GenAI tool, verify its availability through official channels before proceeding. Check the official website or trusted news sources to confirm the release.

GenAI is changing the world around us at a rapid pace. Make sure it doesn’t change yours for the worse.
